In this post I will criticize an experiment that attempts to show the existence of a mind-over-matter effect. My criticism does not at all arise from any disbelief in psi phenomena such as telepathy and ESP. I have written many posts presenting the evidence for ESP and clairvoyance, which you can find in the series of posts here and here, continuing to press Older Posts at the bottom right to see more. Later in this post I will give a bullet list linking to some of the best evidence for paranormal psi effects. I regard the evidence for ESP (telepathy) and clairvoyance as very good.

The experiment I refer to is described in the paper "Enhanced mind-matter interactions following rTMS induced frontal lobe inhibition" which you can read here. The authors start out by giving some speculative reasons for thinking that the brain may suppress or inhibit paranormal powers of humans. My concern that the authors have gone astray begins when I read the passage below:

"Based on our findings in the two individuals with damage to their frontal lobes, we adopted a new approach to help determine whether the left medial middle frontal region of brain acts [as] a filter to inhibit psi. This was the use of repetitive transcranial magnetic stimulation (rTMS) to induce reversible brain lesions in the left medial middle frontal region in healthy participants."

Inducing "reversible brain lesions"? That sounds like dangerous fiddling that might go wrong and cause permanent damage to the brain. On this blog I have strongly criticized neuroscience experiments that may endanger human subjects, in the series of posts you can read here and here. To be consistent, I must criticize just as strongly parapsychologists who use similar techniques. There are very many entirely safe ways to test whether humans have paranormal psychic abilities, and I describe one of them later in this post. So I don't understand why anyone would feel justified in zapping someone's brain in an attempt to provide evidence for such abilities. There's no need for such high-tech gimmickry: abilities such as mind-over-matter and telepathy can be tested in simple ways that do not rely on technology at all. I would strongly criticize any conventional neuroscience experiment that claimed to be inducing "reversible brain lesions," and I must attack this experiment just as strongly for doing such fiddling.

The paper describing the experiment tells us about an experimental setup in which people were asked to change the output of a random number generator producing 0s and 1s, by looking at a computer screen showing an arrow and willing the arrow to move in a particular direction. The idea was that if there were many more 0s than 1s, an arrow in the middle of the screen would move towards one end of the screen; and if there were many more 1s than 0s, the arrow would move towards the other end.

We have some paragraphs describing the way the data was analyzed, and the results. Here the paper fails to follow a golden rule for parapsychology experiments. That rule is: keep things as simple as possible, so that readers will readily understand any impressive results achieved, and will have as little tendency as possible to suspect that some statistical sleight-of-hand is occurring. The importance of this rule cannot be overemphasized. Skeptics and materialist scientists start out by being skeptical of claims of paranormal abilities. The more complex your experimental setup and data analysis, the easier it will be for them to ignore or belittle your results.

There is a golden rule of computer programming, the rule of KISS, which stands for Keep It Simple, Stupid. People doing parapsychology experiments should follow the same rule. The more complex the experimental setup, the easier it will be for skeptics to suggest that some kind of "smoke and mirrors" was going on.

The authors of the paper "Enhanced mind-matter interactions following rTMS induced frontal lobe inhibition" have violated this rule. They give us some paragraphs of statistical gobbledygook about their results, and fail to communicate an impressive result in any way that even 10% of their readers will be able to understand. I myself am unable to understand any result in the paper providing impressive evidence of a mind over matter effect. If there is any such result, the authors have failed to effectively describe it in a way the general public can understand.

The authors state this:

"As predicted by our a priori hypothesis, we demonstrated that healthy participants with reversible rTMS induced lesions targeting the left medial middle frontal brain region showed larger right intention effects on a mind-matter interaction task compared to healthy participants without rTMS induced lesions. This significant effect was found only after we applied a post hoc weighting procedure aligned with our overarching hypothesis."

It sounds like the raw results of their experiment failed to show a significant effect, but that by "calling an audible" after gathering data to try to "save the day" by introducing some unplanned statistical trick, the authors were able to claim a significant result. This sounds like the kind of sleazy maneuver that neuroscientists so often use to try to gin up a result showing "statistical significance." Instead of acting like badly behaving neuroscientists, our authors should have used pre-registration (for example, in the form of a registered report). The authors should have published a very exact plan for how data would be gathered and analyzed, before gathering any data. They then should have stuck to that plan. If this resulted in a non-significant effect or null result, they should have called that result a non-significant effect or null result.

Parapsychologists should not be aping the bad habits of neuroscientists, whether it be zapping brains in a potentially dangerous way, or following "keep torturing the data until it confesses" tactics. Parapsychologists should be following experimental best practices.

The experimental evidence for telepathy (one form of extrasensory perception, or ESP) is very good. We have almost two hundred years of compelling evidence for the phenomenon of clairvoyance, a type of extrasensory perception occurring when a person is asked to describe something he cannot see and does not know about. It is not correct that serious study of this topic began about 1882 with the founding of the Society for Psychical Research in England, as often claimed. Serious rigorous investigation of the topic of clairvoyance dates back as far as 1825, with the 1825-1831 report of the Royal Academy of Medicine finding in favor of clairvoyance. Serious scholarly investigation of clairvoyance occurred many times between 1825 and 1882. Such investigations often involved subjects who were hypnotized, with many investigators reporting clairvoyance from hypnotized subjects or subjects who were in a trance. Experimental investigation of telepathy occurred abundantly in the twentieth century, with many university investigators such as Joseph Rhine of Duke University reporting spectacular successes.

You can read up about some of the evidence for such things by reading some of my posts and free online books below:

There is a simple way for you to test this subject yourself, by doing quick tests with your friends and family members. I will now describe a quick and simple way of doing such tests that I have found to be highly successful, as I report in another post here. I have no idea whether you will get similar success yourself, but I would not be surprised if you do. Below are some suggestions:

(1) Test ideally using family members or close friends. I don't actually have any data showing that tests of this type are more likely to be successful using family members or close friends, but I can simply report that I have had much success testing with family members.

(2) Ask the family member or friend to guess some unusual thing that you have seen with your eyes or seen in a dream. Announce this simply as a guessing game, rather than some ESP or telepathy test. For example, you might say something like, "I saw something unusual today -- I'll give you four guesses to guess what it was." Or you might say, "I dreamed of something I rarely dream of -- I'll give you four guesses to guess what it was."

(3) Do not give any clues about your guess target, or give only a very weak clue. Your test is looking for a guess that matches the guess target, something that should be extremely improbable by chance alone. You will undermine that if you give any good clue, such as "I'm thinking of an animal" or "I'm thinking of something in our house." If you give a clue, give only a very weak one such as "I saw something unusual on my walk today, can you guess what it was," or "I had a dream about something I rarely dream of, can you guess what it was."

(4) Be sure to suggest that the person try three or four guesses rather than a single guess. I have noticed a strong "warm up" effect in tests like this: the first guess someone makes usually fails, but the second or third guess is often correct or close to the answer. For example, not long ago I said to one of my daughters, "You'll never guess what I saw down the street." I gave no clues, but asked her to guess. After a wrong guess of an orange cat, her second guess was "a raccoon," which is just what I saw. No one in our family had seen such a thing on our street before. Later in the day I asked her what I saw in a weird dream I recently had, mentioning only that it involved something odd in our front yard. After a wrong first guess of a snowman, she asked, "Was it a wild animal?" I said yes. Then she asked, "Was it an elephant?" I said yes.

(5) After the person makes the first guess, suggest that the person take 10 seconds before making each of the next guesses. Throughout the entire guessing session, you should be trying hard to visualize the thing you are asking the person to guess. Slowing the process down by suggesting 10 seconds between guesses may increase the chances of your thought traveling to the subject you are testing.

(6) Only test using a guess target that is some simple thing that you can clearly visualize in your mind. Do not test using a guess target of some complicated scene involving multiple actions or interacting objects. For example, don't ask someone to guess some scene you saw that involved someone dropping his coffee and spilling it on his feet. Use a guess target of some single object or a single animal or a single human. Testing with types of animals seems to work well. If the test object or animal has a strong color or some characteristic action, all the better. Do not test using some extremely common sight such as your family dog. Success with such a test will not be very impressive. It's better to use a rarer sight, maybe something you see as rarely as a donkey or a raccoon.

(7) Answer only yes or no questions, counting each question as one of the three or four allowed guesses. You can include a single "You're getting warm" answer instead of a "no" answer, but no more than one.

(8) Very soon after the test, write down the results, recording all guesses and questions, and any responses you made such as "yes" or "no." With testing like this, the last thing you want to rely on is a memory of some event happening weeks ago. Write down the results of your test, positive or negative, within a few minutes, or at most an hour, of making the test.

(9) Do a single test (allowing three or four guesses) only about once every week or two weeks. There may be a significant fatigue factor in such tests. A person who does well on such a test may not continue to do well if you keep testing him on the same day. To avoid such fatigue and to avoid annoying people with too many tests, it is good to just suggest a casual test as described above, once every week or two weeks. Keep a long-term record of all tests of this type you have done, recording failures as well as successes.

(10) It's best not to announce the test as an ESP test or as a telepathy test, but to describe it as a quick guessing game or a test of chance. Our materialist professors have senselessly succeeded in creating a great deal of unreasonable prejudice and bias against psychic realities that are well-established. So the mere act of announcing an ESP test may cause your subject to raise mental barriers that may prevent any successful result. To avoid that, it is best to describe your test as a quick guessing game or a test of chance.

(11) It's best to choose a guess target that you personally saw either in reality or in a dream. The more personal connection you have with the guess target, the better. Something that you personally saw recently (either in reality or a dream) may work better than something you merely chose randomly. The richer your recent sensory experience of the guess target, the better. Choosing a guess target that you both saw and touched may work better than something you merely saw. The more you have thought about the guess target, the better. It's better that the object have one or two colors than many colors, and the brighter the color, the better.

(12) Be cautious in publicly reporting successful results. I would wait until you get three or four good successful tests before reporting anything about such tests on anything like social media. Also, avoid reporting your results as evidence of anything, unless you have something very impressive to report. Social media has a horde of skeptics ready to attack you if you claim evidence for ESP based on slim results. A good rejoinder to such attacks is if you can say, "I'm not claiming anything, I'm just reporting what happened."

Above we see some guess targets that were successfully guessed after only a few guesses, in trials in which the guesser was not told that the item was an animal or anything living. There were about nine trials, with one or two other trials being unsuccessful, and one being a partial success. The guess targets were only in my mind, and I compiled the visual above only after these items were guessed correctly.
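Rules (8) and (9) ask you to write results down promptly and to keep a long-term record that includes failures as well as successes. If you prefer to keep that record on a computer, something as small as the Python sketch below would do. It is only an illustration under my own assumptions: `GuessSession` and `summarize` are hypothetical names I made up, and, following rule (7), every yes-or-no question is logged as one of the guesses.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GuessSession:
    """One guessing session: a single guess target plus every guess or yes/no question asked.

    Hypothetical record format; add a date/time field if you want one.
    """
    target: str
    guesses: list[str] = field(default_factory=list)
    hit_on: int | None = None  # 1-based position of the correct guess, or None if never guessed

    def __post_init__(self) -> None:
        # A recorded hit must point at one of the logged guesses.
        if self.hit_on is not None and not 1 <= self.hit_on <= len(self.guesses):
            raise ValueError("hit_on must refer to one of the logged guesses")

    @property
    def success(self) -> bool:
        return self.hit_on is not None


def summarize(sessions: list[GuessSession]) -> dict[str, int]:
    """Totals over all sessions, failures included (rule 9)."""
    return {
        "sessions": len(sessions),
        "guesses": sum(len(s.guesses) for s in sessions),
        "hits": sum(1 for s in sessions if s.success),
    }


# The two sessions with my daughter described in rule (4):
log = [
    GuessSession("raccoon", ["an orange cat", "a raccoon"], hit_on=2),
    GuessSession("elephant in the front yard (dream)",
                 ["a snowman", "Was it a wild animal?", "Was it an elephant?"], hit_on=3),
]
print(summarize(log))  # {'sessions': 2, 'guesses': 5, 'hits': 2}
```

The totals such a log produces (the number of guesses and the number of hits) are the same inputs a binomial probability calculator asks for.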

Tests that you do of this type will be unlikely to ever constitute any substantial contribution to the literature of parapsychology, unless you follow a very formal approach with an eye towards making such a contribution. But such tests may have the effect of helping you to realize or suspect extremely important truths about yourself and other human beings that you might never have realized. A person might read a dozen times about experiments suggesting something, but the truth of that thing may never sink in until that person has some first-hand experience with the thing.

Whether ESP or telepathy can occur is something of very high philosophical importance. There is a reason why materialists show a very dogmatic refusal to seriously study the evidence for telepathy. It is that if telepathy can occur, the core assumptions of materialism must be false. Telepathy could never occur between brains, but might be possible between souls. So any personal evidence you may get of the reality of telepathy can be a very important clue aiding you in your philosophical journey towards better understanding what humans are, and what kind of universe we live in.

Using a binomial probability calculator, it is possible to very roughly estimate the probability of getting success in a series of about nine tests like the one above. To use such a calculator, you need an estimate of the chance of success per trial. With tests like those I have suggested, it is hard to estimate this exactly, because the guess target you choose could be almost anything. One reasonable approach would be to assume 100,000 different guess possibilities. The chance of a successful guess within only four guesses is then 4 in 100,000, which can be calculated like this, giving a result of only .00004.

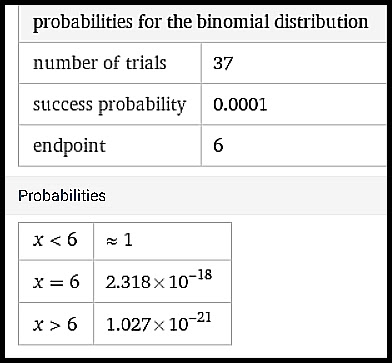
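For anyone who would rather check the four-guess figure without a web calculator, here is a minimal Python sketch. It assumes, as above, that the guess target is one of 100,000 equally likely possibilities and that the guesser never repeats a guess; under those assumptions the chance of a hit within four guesses is simply 4 in 100,000. The function name `chance_within_guesses` is just my own illustrative name.

```python
from fractions import Fraction


def chance_within_guesses(possibilities: int, guesses: int) -> Fraction:
    """Chance that one of `guesses` distinct guesses hits a target chosen from
    `possibilities` equally likely options (assumes no guess is repeated)."""
    if not 0 <= guesses <= possibilities:
        raise ValueError("guesses must be between 0 and the number of possibilities")
    return Fraction(guesses, possibilities)


exact = chance_within_guesses(100_000, 4)
print(exact, float(exact))  # 1/25000 4e-05

# If guesses were instead independent draws that could repeat, the chance would be
# 1 - (1 - 1/100,000)**4, which is very slightly smaller (about .0000399994).
independent = 1 - (1 - 1 / 100_000) ** 4
```

Either way of modeling the guesses gives essentially the same .00004 figure, so the simpler "distinct guesses" version is good enough for a rough estimate like this.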

The screen above is from the StatTrek binomial probability calculator, which doesn't seem to work whenever the probability is much less than one in a million. A similar calculator, the Wolfram Alpha binomial probability calculator, works with very low probabilities. I used that calculator with the data described in my post here. The situation described in that post was:

- Each guess had a chance of no more than about 1 in 10,000 of being correct, as I never mentioned the category of what was to be guessed, but always merely asked a relative to guess after saying something like "I saw something today, try and guess what it was" or "I dreamed of something today, try to guess what it was."

- Counting every question asked (all of them yes-or-no questions) as a guess, there were a total of about 37 guesses across about nine guessing trials involving nine targets.

- Six times the guess target was correctly guessed within a few guesses, and one time the answer was wrong but close (with a final guess of a red bicycle rather than a red double-decker bus, both being red vehicles).

Counting the close guess as a failed attempt, I entered this data into the Wolfram Alpha binomial probability calculator, getting these results (with this calculator the "number of successes" is referred to as the "endpoint"):

With a probability of less than about .00000000000000001, it would be very unlikely for anyone ever to get a result this successful by mere chance, even if every person on planet Earth were to try such a set of trials. You can use the same Wolfram Alpha binomial probability calculator to get a rough estimate of the likelihood of the results you get.
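The same kind of binomial tail probability can be computed in a few lines of Python using only the standard library, which is handy if a web calculator is unavailable. This is a minimal sketch, not a reproduction of the Wolfram Alpha tool: it assumes the inputs listed above (about 37 guesses, each with about a 1 in 10,000 chance of being right, and 6 hits, with the close guess counted as a miss), and it treats every guess as an independent trial, which is a simplification. The function name `binomial_tail` is my own.

```python
from math import comb


def binomial_tail(n: int, k: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the chance of k or more hits in n independent
    guesses when each guess has probability p of being right."""
    # Sum the upper tail directly; computing 1 - P(X < k) would round to 0 for tiny p.
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Inputs from the list above: about 37 guesses, about 1 in 10,000 per guess, 6 hits.
prob = binomial_tail(n=37, k=6, p=1 / 10_000)
print(f"{prob:.2e}")  # 2.32e-18
```

If SciPy is installed, `scipy.stats.binom.sf(5, 37, 1 / 10_000)` should give the same tail probability, since `sf(k)` returns the chance of more than k successes.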

I mention using a binomial probability calculator above, but just ignore such a thing if you find it confusing, because the use of such a calculator is just some optional "icing on the cake" that can be used after a successful series of tests. The point of the tests I suggest here is not to end up with some particular probability number, but mainly to end up with an impression in your mind of whether you were able to get substantive evidence that telepathy or mind reading is occurring. Such an impression may be a valuable clue that tends to point you in the right direction in developing a sound worldview. Some compelling personal experience with telepathy may save you from a lifetime of holding the widely taught but unfounded and untenable dogma that you are merely a brain or merely the result of some chemical activity in a brain. Getting such experience, you may embark on further studies leading you in the right direction. Keep in mind that a negative test never disproves telepathy, just as failing to jump a one-meter hurdle does nothing to show that people can never jump one-meter hurdles.

In the academic literature of ESP testing, we often read about the use of Zener cards, cards bearing one of five abstract symbols. While using such cards has the advantage of allowing precise estimates of probability, there is no particular reason to think that better results will be obtained when using such cards. To the contrary, it may be that impressive results are much less likely to be obtained using such cards, and that ESP tests work better when living or tangible guess targets are used, such as a living animal or a tangible object.

A very important point I must reiterate is that when trying tests such as I have suggested, it is crucial to allow for a second, third and fourth guess, with at least ten seconds between guesses (during which the person thinking of the guess target tries to visualize the guess target). In my testing the correct guesses tend to come on the second, third or fourth try.

The results mentioned above are not by any means the best result I have got in a personal ESP test. The beginning of my very interesting post "Spookiest Observations: A Deluxe Narrative" describes a much more impressive result I got long ago, in a different type of test than the type of test described above.