Claims by neuroscientists that they have found "representations" in the brain (other than genetic representations) are examples of what very abundantly exists in biology: groundless achievement legends. There is no robust evidence for any such representations.

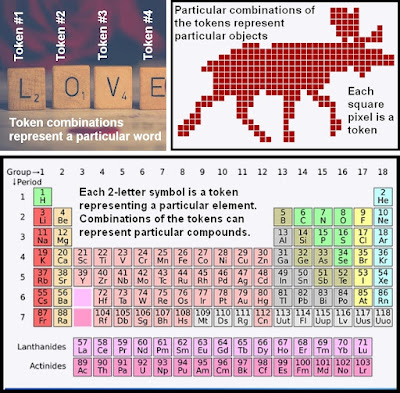

Excluding the genetic information stored in DNA and its genes, there are simply no physical signs of learned information stored in a brain in any kind of organized format that resembles some kind of system of representation. If learned information were stored in a brain, it would tend to have an easily detected hallmark: the hallmark of token repetition. There would be some system of tokens, each of which would represent something, perhaps a sound or a color pixel or a letter. There would be very many repetitions of different types of symbolic tokens. Some examples of tokens are given below. Other examples of tokens include nucleotide base pairs (which in particular combinations of 3 base pairs represent particular amino acids), and also coins and bills (some particular combination of coins and bills can represent some particular amount of wealth).
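As a rough illustration of what "token repetition" looks like in practice, here is a minimal Python sketch that splits a short DNA string into three-letter codons and counts how often each one recurs. The example sequence is made up for illustration, and the helper names `split_into_tokens` and `count_tokens` are hypothetical. Only the five codon-to-amino-acid pairings in the small table are taken from the standard genetic code.

```python
from collections import Counter

# Small excerpt of the standard genetic code (DNA codon -> amino acid).
# Only five of the 64 codons are listed; this is not a full table.
CODON_TABLE = {
    "ATG": "Met",
    "GCT": "Ala",
    "AAA": "Lys",
    "GGC": "Gly",
    "TAA": "Stop",
}


def split_into_tokens(sequence, size=3):
    """Split a string into consecutive, non-overlapping tokens of a fixed size."""
    return [sequence[i:i + size] for i in range(0, len(sequence) - size + 1, size)]


def count_tokens(sequence, size=3):
    """Count how many times each token occurs in the sequence."""
    return Counter(split_into_tokens(sequence, size))


# Made-up sequence, used only to show repeated tokens.
example_sequence = "ATGGCTAAAGCTGGCAAAGCTTAA"

tokens = split_into_tokens(example_sequence)
counts = count_tokens(example_sequence)
decoded = [CODON_TABLE[token] for token in tokens]

print(tokens)
print(counts.most_common())
print(decoded)
```

In this toy sequence the token `GCT` appears three times and `AAA` twice, and each repeat decodes to the same amino acid. That kind of recurring, decodable unit is what is meant here by the hallmark of token repetition.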

Other than the nucleotide base pair triple combinations that represent mere low-level chemical information such as amino acids, something found in neurons and many other types of cells outside of the brain, there is no sign at all of any repetition of symbolic tokens in the brain. Except for genetic information which is merely low-level chemical information, we can find none of the hallmarks of symbolic information (the repetition of symbolic tokens) inside the brain. No one has ever found anything that looks like traces or remnants of learned information by studying brain tissue. If you cut off some piece of brain tissue when someone dies, and place it under the most powerful electron microscope, you will never find any evidence that such tissue stored information learned during a lifetime, and you will never be able to figure out what a person learned from studying such tissue. This is one reason why scientists and law enforcement officials never bother to preserve the brains of dead people in hopes of learning something about what such people experienced during their lives, or what they thought or believed, or what deeds they committed.

But despite their complete failure to find any robust evidence of non-genetic representations in the brain, neuroscientists often make groundless boasts of having discovered representations. What is going on is pareidolia, people reporting seeing something that is not there, after wishfully analyzing large amounts of ambiguous and hazy data. It's like someone eagerly analyzing his toast every day for years, looking for something that looks like the face of Jesus, and eventually reporting he saw something that looked to him like the face of Jesus. It's also like someone walking in many different forests, eagerly looking for face shapes on trees, and occasionally reporting a success, or like someone scanning the sky, looking for clouds that look like animal shapes.

The latest example of nonsensical neuroscientist pareidolia is to be found in a press release from Columbia University, and the paper that press release describes in very misleading terms. The press release has the phony headline "Scientists Capture Clearest Glimpse of How Brain Cells Embody Thought." When you read a headline like that, you should remember a sad truth that has been glaringly obvious for many years now: university press releases on topics of science are no more trustworthy than corporate PR press releases. There is the most gigantic amount of lying, hype and misrepresentation in university press releases these days, and such baloney occurs in equal amounts in the press releases of every major university. So don't think for a moment that you can trust a press release because it came from Harvard or Columbia or Yale or Oxford University. I wish I had a dollar for every bogus press release that has been issued by such institutions. The subtitle of the press release is the 100% untrue claim "Recordings from thousands of neurons reveal how a person’s brain abstractly represents acts of reasoning."

We have an utterly groundless claim by a neuroscientist that he and his colleagues found a "uniquely revealing dataset that is letting us for the first time monitor how the brain’s cells represent a learning process critical for inferential reasoning." We then have an equally groundless claim by another neuroscientist that "this work elucidates a neural basis for conceptual knowledge, which is essential for reasoning, making inferences, planning and even regulating emotions."

Do the authors claim to have seen some structure in the brain corresponding to these claims? Certainly not. They did not do any brain imaging such as MRI scans. All they had to work with was EEG readings, readings of brain waves. Would anyone have seen any sign of such claimed representations by visually examining the wavy lines of these EEG readings? Certainly not.

The press release reveals that what is going on is an affair that can be described as "keep torturing the data until it confesses in the weakest voice." We read this:

"The researchers recast the volunteers’ brain activity into geometric representations – into shapes, that is – albeit ones occupying thousands of dimensions instead of the familiar three dimensions that we routinely visualize. 'These are high-dimensional geometrical shapes that we cannot imagine or visualize on a computer monitor,' said Dr. Fusi. 'But we can use mathematical techniques to visualize much simplified renditions of them in 3D.' ”

    %for every area in the dataset
    for i = 1:length(cell_groups)
        %run analysis for the inference absent sessions
        idx_current = intersect(cell_groups{i},find(ismember(sessions,inference_absent)));
        for i_rs = 1:n_resample
            [avg_array,~] = construct_regressors(neu,n_samples(i),idx_current);
            [t_1,t_2] = sd(avg_array,n_perm_inner,n_samples(i));
            sd_{i,1} = cat(2,sd_{i,1},t_1);
            sd_boot{i,1} = cat(2,sd_boot{i,1},t_2);
            [ccgp_{i,1}] = cat(2,ccgp_{i,1},ccgp(avg_array,...
                n_perm_inner,false,n_samples(i)));
            [ccgp_boot{i,1}] = cat(2,ccgp_boot{i,1},ccgp(avg_array,...
                n_perm_inner,true,n_samples(i)));
            ps_{i,1} = cat(2,ps_{i,1},ps(avg_array,...
                n_perm_inner,false));
            ps_boot{i,1} = cat(2,ps_boot{i,1},ps(avg_array,...
                n_perm_inner,true));
        end
        %run again for the inference present sessions
        idx_current = intersect(cell_groups{i},find(ismember(sessions,inference_present)));
        for i_rs = 1:n_resample
            [avg_array,~] = construct_regressors(neu,n_samples(i),idx_current);
            [t_1,t_2] = sd(avg_array,n_perm_inner,n_samples(i));
            sd_{i,2} = cat(2,sd_{i,2},t_1);
            sd_boot{i,2} = cat(2,sd_boot{i,2},t_2);
            [ccgp_{i,2}] = cat(2,ccgp_{i,2},ccgp(avg_array,...
                n_perm_inner,false,n_samples(i)));
            [ccgp_boot{i,2}] = cat(2,ccgp_boot{i,2},ccgp(avg_array,...
                n_perm_inner,true,n_samples(i)));
            ps_{i,2} = cat(2,ps_{i,2},ps(avg_array,...
                n_perm_inner,false));
            ps_boot{i,2} = cat(2,ps_boot{i,2},ps(avg_array,...
                n_perm_inner,true));
        end
    end

Every single piece of data is being passed into a function called construct_regressors(), but what is that function doing? We cannot tell, because the code for that function has not been supplied. We should be suspicious that this construct_regressors() function was doing something so arbitrary and convoluted that the authors were embarrassed to publish the code for that function.

What we have here is something like the situation described in the visual below:


To pass off the results of so vast an amount of data monkeying as a discovery is a case of BS and baloney. No representations in the brain or brain waves have been discovered here. All we have is scientists manipulating and contorting data like crazy, and then displaying some pareidolia by passing off their super-manipulated data as an example of "representations."

Can you imagine what a scandal would arise if climate scientists tried to get away with even one tenth of this amount of manipulating and contorting and distorting their data? Skeptics of their work would start "screaming bloody murder," and howl about how scientists were failing to use their original data, and using instead manipulated, contorted, twisted, distorted data. But it seems that neuroscientists senselessly think that it is okay for them to play around endlessly with data from brains, and that they have the right to contort and twist and distort such data in dozens of different ways, and then pass off the result (an utterly artificial construction) as something they can then call "what the brain does."

What can you call data like this which has undergone so many contortions and manipulations and distortions and transfigurations that it is something almost totally different from the raw data originally gathered? You might be tempted to call it "fake data," but that isn't quite right, because the authors have described all the transformations they did of the data. So rather than calling it "fake data," a description that is not quite right, we can merely say that it is data that has been so enormously contorted and manipulated and transfigured that it is data that cannot be claimed as evidence telling us about brain states or brain activity.

Experimental neuroscience is in a state of great sickness and dysfunction. The use of Questionable Research Practices seems to be more the rule than the exception in experimental neuroscience. When scientists think that it is okay for them to perform endless manipulations and contortions and distortions and transfigurations of their data, and to then pass off the resulting artificial mess as "what we got from the brain," then it is a sign that neuroscience has sunk to a nadir of dysfunction.

Brain waves don't represent anything that someone has learned. Brain waves are no more representations of something learned than clouds are representations of something learned. Brain waves are streams of random data, as random as the stream of clouds passing above a house or a city. Not one iota of evidence of brain representations has been presented in this paper. The paper is entitled "Abstract representations emerge in human hippocampal neurons during inference." An honest title for the paper would have been "We got something we called 'abstract representations' after we manipulated and contorted brain wave readings in dozens of weird ways."

For additional examples of neuroscientists using computer programs to play "keep torturing the data until it confesses," read my post here, entitled "Programming Gone Astray: Iteration Inanity of the Neuroscientists' Distortion Loops."

Hello Mr. Mark Mahin, I hope you are doing well. I have been following your posts for a while now and I like the way you make critical observations of different articles, theories and scientific consensus. Lately, some articles have been published referring to a supposed mechanism of how memories work and are stored. So far, they have not been discussed in your posts. I have read a summary of the articles in some publications on the MindMatters platform, which is also dedicated to providing an alternative view of reality, but in these publications the information in the published articles is not questioned or analyzed. Even after reading this brief summary, I see no justification for the claims made in the scientific articles, based on what I have read in this blog. For example, the article "The brain creates three copies for a single memory" https://www.sciencedaily.com/releases/2024/08/240815163622.htm , from the University of Basel, does not provide clear evidence for such a claim. It describes a supposed system of multiple copies of memories in several stages that supposedly helps long-term memory, but then mentions that the experiment was done on mice, which obviously cannot be asked what they are remembering. The study does not say that any such memory was read from the neurons in the hippocampus, which would corroborate that they stored the information, nor that the supposed copy neurons made a good copy containing the memory, as checked by comparing it with the first. Likewise, there is no talk of the tokens that store the information. In short, there are countless observations that can be made that call into question the idea raised in the article.
There is also the following post https://mindmatters.ai/2020/08/what-neuroscientists-now-know-about-how-memories-are-born-and-die/ , which discusses WHAT NEUROSCIENTISTS NOW KNOW ABOUT HOW MEMORIES ARE BORN AND DIE. The post is quite contradictory in my opinion. The title seems like typical clickbait, which is very disappointing from MindMatters, considering that they present themselves as a medium that tries to provide a non-scientist version of the world and makes observations of the flaws in such claims. The headline states that neuroscientists now know how a memory is born and dies, but in the body of the post they say that they are not sure where the memory is located: it is supposedly distributed throughout the brain, and the hippocampus is just an intermediary that encodes and assigns routes. (A previous study claims the opposite, so apparently there is no consensus; neuroscience is full of contradictions.) So if they are not sure where a memory is in the brain, how can they tell us that they now know how a memory is born and dies? The post then continues with a number of theories and hypotheses, such as the neuronal firing sequence (the pareidolia that you mention in one of your articles) or the famous synapse, proposed by scientists, but there is still no evidence that such a memory was read from a certain neuron, synapse or firing.

As a reader of your blog I would really like to see a post on your blog about these scientific investigations, where we can read your most detailed observations and contrast them with other investigations.

Please forgive the English, I must use a translator since I don't speak English, a warm greeting from Colombia.

Thanks for the comment, Kevin. My latest post has a critique of the poor-quality "3 copies of every memory" study you mention, and shows how it fails to substantiate that claim:

https://headtruth.blogspot.com/2024/09/no-they-didnt-find-in-brain-3-copies-of.html

The Mind Matters site has posts by various authors, and posts by different authors may disagree with each other. The year 2020 Mind Matters article you cite is mainly an uncritical parroting of unproven neuroscientist dogmas about a brain storage of memory, dogmas in conflict with much that neuroscientists have discovered. Other articles on the Mind Matters site have been much better at presenting evidence against the materialist claims of neuroscientists.

Cheers.