An extremely common phenomenon in science papers is the practice of faulty citations. It commonly works like this:

- A scientific paper will claim that some dubious assertion is "well-established" or supported by "overwhelming evidence," immediately following such claims by citations.

- A careful examination of the cited papers will find either that their authors did not make the assertion, or that the papers were poorly designed studies that failed to provide robust evidence to back up the assertion.

Let us look at some examples of such faulty citations. The paper "Learning causes synaptogenesis, whereas motor activity causes angiogenesis, in cerebellar cortex of adult rats" has been cited 1490 times in the neuroscience literature. That's very strange, because the paper describes a poorly designed experiment guilty of quite a few Questionable Research Practices. The paper tells us it used 38 rats in four study groups, stating: "Thirty-eight adult Long-Evans hooded female rats, kept in small groups until 10 months old, were housed individually for 30 days in one of four experimental groups." We are also told that five of the rats had to be "dropped out." This means there were fewer than 10 animals per study group. In experimental studies 15 subjects per study group is the minimum for a modestly reliable experimental result. The authors could have found out that they were using way too few subjects if they had followed good experimental practice by doing a sample size calculation, but they failed to do such a calculation.

The authors also committed a serious violation of good scientific practice by failing to tell us the exact number of subjects in each study group. We see some graphs comparing the study groups, and one of the graphs shows one of the study groups (one that did learning) having more "Synapses per Purkinje cell" (although not a higher synaptic density). But exactly how many rats were in this study group? Was it 9, 5, or only 2? We have no idea, because the authors did not tell us how many research subjects (how many rats) were in each of the study groups. This is a glaring violation of good scientific practices. Also, the study fails to follow a blinding protocol. The scientists measuring the synapse numbers should have been blind to which study group the animals were in, but they apparently were not. For such reasons, this study provides zero robust evidence to support the claim in its title that learning causes synaptogenesis (the formation of new synapses).

Let us look at another paper that has been cited more than 4000 times by neuroscientists. The paper is "The Molecular Biology of Memory Storage: A Dialogue Between Genes and Synapses" by Eric R. Kandel. This is not an experimental paper, but a review article. There are red flags near the beginning. The author writes in an autobiographical way, as if he was telling his life story. That is not the standard way in which a scientific review article is written. A review article is supposed to be a dispassionate examination of evidence, without wading into personal matters such as the author's life quest. The article contains 39 uses of "I" and countless other uses of "we." For example, we read, "A decade ago, when I reached my 60th birthday, I gathered up my courage and returned to the hippocampus."

There are some diagrams, but none of them are supported by specific numbers mentioning the exact size of any study group or the number of research subjects used. There are no specific mentions of exact research results that mention how many subjects were researched. This is the exact opposite of how a good scientific review article should be written. A good scientific review should let us know exactly how many subjects were used in all of the experiments it is citing. The article is littered with groundless achievement legends, unsupported claims that some researcher showed X, Y or Z without any specific numerical evidence showing that any such thing was shown. Near the end of the article, the author asks so many questions that it is clear that the title of the paper is inappropriate, and that there is no such thing as a known "molecular biology of memory storage."

What we have here is mainly an autobiographical essay that fails to meet the standards of a good scientific review article. But this essay has been cited more than 4000 times by researchers, just as if it were a regular scientific paper.

Another highly cited neuroscience paper is the paper "A specific amyloid-β protein assembly in the brain impairs memory," which has been cited more than 2000 times. A long article in the leading journal Science claims that this paper and some similar papers may have "signs of fabrication." Below is a quote from that article about the protein described in the paper:

"Given those findings, the scarcity of independent confirmation of the Aβ*56 claims seems telling, Selkoe says. 'In science, once you publish your data, if it’s not readily replicated, then there is real concern that it’s not correct or true. There’s precious little clearcut evidence that Aβ*56 exists, or if it exists, correlates in a reproducible fashion with features of Alzheimer’s—even in animal models.' ”

Another highly cited neuroscience paper is "The Fusiform Face Area: A Module in Human Extrastriate Cortex Specialized for Face Perception." The paper (which has been cited more than 8000 times) claims to find higher signal activation in some tiny part of the brain when subjects were shown faces. At first glance Figure 3 of the paper looks a little impressive. We see a graph showing observations of faces involving about a 2% signal change, compared to 18 observations of non-faces involving only about a 1% signal change. Unfortunately the number of subjects used to produce these graphs was too small for a reliable result to be claimed -- only six subjects. Also, the paper makes no mention of any blinding protocol. So the people estimating the signal changes apparently knew whether a face had been observed or not, which could have biased their estimations. A paper like this should not be taken seriously unless the paper mentions how a blinding protocol was followed.

The paper "Place navigation impaired in rats with hippocampal lesions" has been cited more than 7000 times. The paper did not deserve such citation, because it failed to follow good research practices. The study groups were too small, consisting of 10, 13, 4 and 4 subjects. Each study group should have had at least 15 subjects for a somewhat persuasive result. It is very easy to get false alarm results using study group sizes smaller than 15.

The 2002 review article "Control of goal-directed and stimulus-driven attention in the brain" has been cited 12,792 times. Such a level of citation is very strange, because the article provides no strong evidence of neural correlates of goal-directed attention. The paper makes use of the very misleading visual representation technique so favored by neuroscientists, in which regions of the brain showing very slightly greater activation (such as 1 part in 200 or 1 part in 500) are shown in bright red or bright yellow, with all other brain regions looking grey. Such visuals tend to create the idea of a much higher activation difference than the data actually indicates.

A very misleading part of the article is Figure 3. We see a line graph in which the left side is labeled "fMRI signal," and we see variations from "0.05" to ".25," which a reader will typically interpret as being a 500% variation. The authors forgot to label this as a mere "percent signal change" variation. Instead of it being a 500% signal variation, it is a mere variation between .0005 and .0025, a fluctuation of less than 1 part in 300. Tracking down the source paper cited ("Neural systems for visual orienting and their relationships to spatial working memory," Figure 3) shows the correct labeling, which used "percent signal change" to show that the reported variation was extremely slight. Why has a very slight variation been visually represented (in the "Control of goal-directed and stimulus-driven attention in the brain" paper) to make it look like some huge variation?
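The arithmetic behind this point can be checked in a few lines of Python. This is only a minimal sketch: the variable names are illustrative, and the two axis values are the ones quoted above, read as percent signal change.

```python
# Axis endpoints quoted above, read as percent signal change
# (for example, 0.05 means 0.05%, not 5%).
low_percent = 0.05
high_percent = 0.25

# Convert percentages to fractions of the baseline signal.
low_fraction = low_percent / 100    # 0.0005
high_fraction = high_percent / 100  # 0.0025

# Size of the swing relative to the baseline signal.
swing = high_fraction - low_fraction
one_part_in = 1 / swing

# Ratio between the two plotted values, which is the impression
# an axis without a "percent signal change" label tends to give.
ratio = high_percent / low_percent

print(f"swing = {swing:.4f} of baseline, about 1 part in {one_part_in:.0f}")
print(f"ratio of plotted values = {ratio:.0f}x")
```

The swing works out to about 1 part in 500 of the baseline signal, which is consistent with "less than 1 part in 300," even though the plotted values differ by a factor of five.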

The 1998 paper "Neurogenesis in the adult human hippocampus" has received more than 8000 citations, according to Google Scholar. But a 2019 paper says this about adult neurogenesis (the formation of new neurons):

"Here we examine the evidence for adult human neurogenesis and note important limitations of the methodologies used to study it. A balanced review of the literature and evaluation of the data indicate that adult neurogenesis in human brain is improbable. In fact, in several high quality recent studies in adult human brain, unlike in adult brains of other species, neurogenesis was not detectable."

A 2022 paper was titled "Mounting evidence suggests human adult neurogenesis is unlikely."

Recently the interesting web site www.madinamerica.com (which specializes in a critique of psychiatry dogmas) had a post that mentioned an interesting case of a weak neuroscience paper that got very many citations:

"A highly publicized MD-candidate-gene link was put forward in a widely cited 2003 study by Avshalom Caspi and colleagues (according to Google Scholar, cited over 10,400 times as of August, 2022, or about 550 citations per year over 19 years), who concluded that people experiencing 'stressful life events' are more likely to be diagnosed with depression if they carried 5-HTTLPR, a variant genetic sequence within the SLC6A4 gene that encodes a protein that transports serotonin within neuronal cells. For many people, the Caspi study provided a sensible explanation for the causes of depression, where life events and genetic predisposition combined to explain why some people become depressed, while others do not. However, despite the publication of at least 450 research papers about this genetic variant, by 2018 or so it was clear that the 5-HTTLPR depression theory did not hold up. The rise and fall of the 5-HTTLPR-depression link was described in psychiatric drug researcher Derek Lowe’s aptly-titled 2019 Science article, “There Is No ‘Depression Gene.’” The depression candidate gene literature, he wrote, turned out to be 'all noise, all false positives, all junk.' ”

A psychiatrist commented on the mythology of 5-HTTLPR that arose:

"First, what bothers me isn’t just that people said 5-HTTLPR mattered and it didn’t. It’s that we built whole imaginary edifices, whole castles in the air on top of this idea of 5-HTTLPR mattering. We 'figured out' how 5-HTTLPR exerted its effects, what parts of the brain it was active in, what sorts of things it interacted with, how its effects were enhanced or suppressed by the effects of other imaginary depression genes. This isn’t just an explorer coming back from the Orient and claiming there are unicorns there. It’s the explorer describing the life cycle of unicorns, what unicorns eat, all the different subspecies of unicorn, which cuts of unicorn meat are tastiest, and a blow-by-blow account of a wrestling match between unicorns and Bigfoot."

A 2018 paper analyzing more than 1000 highly cited brain imaging papers found that they had a median sample size of only 12. In general, experimental studies that use study group sizes so small do not provide reliable evidence for anything. Study group sizes of at least 15 are needed for even a modestly persuasive result.

There is a standard way for a scientist to determine whether the study group sizes used in an experiment are sufficient to provide adequate statistical power. That way is to do what is called a sample size calculation (also called a power calculation or statistical power calculation). The 2018 paper mentioned above found that "only 4 of 131 papers in 2017 and 5 of 142 papers in 2018 had pre-study power calculations, most for single t-tests and correlations." This is a dismal finding suggesting that poor research habits in experimental neuroscience are more the rule than the exception.
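To make the idea of a sample size calculation concrete, here is a minimal sketch in Python using the common normal approximation for comparing the means of two groups. The effect sizes, the 5% significance level and the 80% power target are illustrative assumptions, not values taken from any of the papers discussed above, and the function name `subjects_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def subjects_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate subjects needed per group for a two-sided, two-group comparison.

    Uses the normal approximation n = 2 * ((z_alpha + z_power) / d) ** 2,
    where d is the assumed standardized effect size (Cohen's d).
    An exact t-test calculation gives slightly larger numbers.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


if __name__ == "__main__":
    # Assumed effect sizes: medium (0.5), large (0.8), very large (1.0).
    for d in (0.5, 0.8, 1.0):
        n = subjects_per_group(d)
        print(f"assumed effect size d={d}: about {n} subjects per group")
```

Under these assumptions, even a very large effect (d = 1.0) calls for about 16 subjects per group, and a medium effect (d = 0.5) calls for about 63. A real study would base the effect size on prior data and would typically use an exact calculation rather than this approximation.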

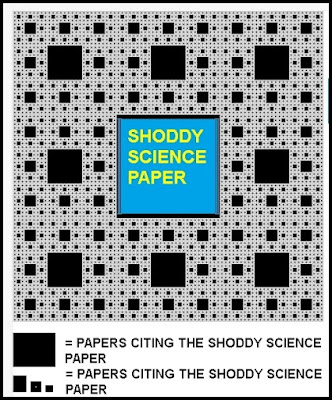

Besides a massive level of citation of weak and shoddy papers and papers describing studies using Questionable Research Practices, a gigantic problem in neuroscience literature is faulty citation. This is when a paper makes some statement, and includes a reference to some other paper to back up its claim. Very often when you track down the cited papers you will find that they did not make the claim being made in the paper making the citation, or failed to provide any robust evidence for such a claim. For example, you may see some science paper have a line like this:

"Research shows that Moravians are more likely to suffer memory problems.17"

But when you track down Reference # 17 listed at the end of the paper, you may find some paper that either does not make any such claim, or provides some research that comes nowhere close to showing such a claim.

A scientific paper entitled "Quotation errors in general science journals" tried to figure out how common such misleading citations are in science papers. It found that such erroneous citations are not at all rare. Examining 250 randomly selected citations, the paper found an error rate of 25%. We read the following:

"Throughout all the journals, 75% of the citations were Fully Substantiated. The remaining 25% of the citations contained errors. The least common type of error was Partial Substantiation, making up 14.5% of all errors. Citations that were completely Unsubstantiated made up a more substantial 33.9% of the total errors. However, most of the errors fell into the Impossible to Substantiate category."

When we multiply the 25% figure by 33.9%, we find that according to the study, roughly 8% of citations in science papers are completely unsubstantiated. That is a stunning degree of error. We would perhaps expect such an error rate from careless high-school students, but not from careful scientists.
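The multiplication can be spelled out in a short Python sketch. The three input figures are the ones in the quote above; the variable names are illustrative, and the share for the "Impossible to Substantiate" category is inferred here on the assumption that the three error categories cover all errors.

```python
# Figures quoted from "Quotation errors in general science journals".
error_rate = 0.25              # share of all citations that contained an error
partial_share = 0.145          # share of errors: Partial Substantiation
unsubstantiated_share = 0.339  # share of errors: Unsubstantiated

# Assumption: the remaining errors are all "Impossible to Substantiate".
impossible_share = 1 - partial_share - unsubstantiated_share

# Convert shares of errors into shares of all citations.
unsubstantiated_of_all = error_rate * unsubstantiated_share
partial_of_all = error_rate * partial_share
impossible_of_all = error_rate * impossible_share

print(f"Unsubstantiated: {unsubstantiated_of_all:.1%} of all citations")
print(f"Partially substantiated: {partial_of_all:.1%} of all citations")
print(f"Impossible to substantiate: {impossible_of_all:.1%} of all citations")
```

The product 0.25 × 0.339 is 0.08475, so the "completely unsubstantiated" share is between 8% and 9% of all citations. The inferred 51.6% share for "Impossible to Substantiate" is consistent with the quote's statement that most errors fell into that category.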

This 25% citation error rate found by the study is consistent with other studies on this topic. In the study we read this:

"In a sampling of 21 similar studies across many fields, total quotation error rates varied from 7.8% to 38.2% (with a mean of 22.4%) ...Furthermore, a meta-analysis of 28 quotation error studies in medical literature found an overall quotation error rate of 25.4% [1]. Therefore, the 25% overall quotation error rate of this study is consistent with the other studies."

In the paper we also read the following: "It has been argued through analysis of misprints that only about 20% of authors citing a paper have actually read the original." If this is true, we can get a better understanding of why so much misinformation is floating around in neuroscience papers. We repeatedly have paper authors spreading legends of scientific achievement, which are abetted by incorrect paper citations often made by authors who have not even read the papers they are citing.

An article at Vox.com suggests that scientists are just as likely to make citations to bad research that can't be replicated as they are to make citations to good research. We read the following:

"The researchers find that studies have about the same number of citations regardless of whether they replicated. If scientists are pretty good at predicting whether a paper replicates, how can it be the case that they are as likely to cite a bad paper as a good one? Menard theorizes that many scientists don’t thoroughly check — or even read — papers once published, expecting that if they’re peer-reviewed, they’re fine. Bad papers are published by a peer-review process that is not adequate to catch them — and once they’re published, they are not penalized for being bad papers."

The above suggests a good rule of thumb: when you read a science paper citing some other science paper, assume that it is as likely as not that the authors of the paper citing the other paper did not even read the other paper. Another good rule of thumb: be very skeptical of any claims you read in a neuroscience paper claiming that something is "well established" or "not controversial." Such claims are routinely made about things that have not been well established by observations.
