There is a great deal of junk science published by neuroscientists, along with much research that is sound. That so much junk science appears is not surprising at all, given the research customs that prevail among neuroscientists.

Let us look at a hypothetical example of the type of junk science that so often appears. Let us imagine a scientist named Jack who wishes to show that a particular protein in the brain (let's call it the XYZ protein) is essential for memory. We can imagine Jack doing a series of experiments, each one taking one week of his time.

Jack thinks up a simple design for this experiment. Some mice will be genetically engineered so that they do not have the XYZ protein. Then the mice will be given a memory test. First, the mice will be placed in a cage, with a shock plate between the mouse and the cheese. When the mouse walks over the shock plate to go directly to the cheese, the mouse will be shocked. Later the mouse will be placed in the cage again. It will be recorded whether the mouse takes an indirect path to get the cheese (as if it remembered the previous shock it got on the shock plate), or whether the mouse just goes directly to the cheese (as if it did not remember the previous shock it got on the shock plate). The visual below shows the experiment:

Now, let us imagine that on Week 1 Jack does this experiment with 6 mice, and finds no difference between the behavior of the mice that had the XYZ protein and those that did not. Jack may then write up these results as "Experiment #1," file them in a folder marked "Experiment #1," and keep testing until he gets the results he is looking for.

Jack may then get some results such as the following:

Week 2: Mice without XYZ protein behave like those with it. Results filed as Experiment #2.

Week 3: Mice without XYZ protein behave like those with it. Results filed as Experiment #3.

Week 4: Mice without XYZ protein behave like those with it. Results filed as Experiment #4.

Week 5: Mice without XYZ protein behave like those with it. Results filed as Experiment #5.

Week 6: Mice without XYZ protein behave like those with it. Results filed as Experiment #6.

Week 7: Mice without XYZ protein behave like those with it. Results filed as Experiment #7.

Week 8: Mice without XYZ protein behave like those with it. Results filed as Experiment #8.

Week 9: Mice without XYZ protein behave like those with it. Results filed as Experiment #9.

Week 10: Mice without XYZ protein behave like those with it. Results filed as Experiment #10.

Week 11: Mice without XYZ protein behave like those with it. Results filed as Experiment #11.

Week 12: Mice without XYZ protein behave like those with it. Results filed as Experiment #12.

Week 13: Mice without XYZ protein behave like those with it. Results filed as Experiment #13.

Week 14: Mice without XYZ protein behave like those with it. Results filed as Experiment #14.

Week 15: Mice without XYZ protein behave like those with it. Results filed as Experiment #15.

Week 16: Mice without XYZ protein behave like those with it. Results filed as Experiment #16.

Then finally on Week 17, Jack may get the experimental result he was hoping for. On this week it may be that 5 out of 6 mice with the XYZ protein avoided the shock plate as if they were remembering well, but only 3 out of 6 mice without the XYZ protein avoided the shock plate as if they were remembering well. Is this evidence that the XYZ protein is needed for memory, or that removing it hurts memory? The result on Week 17 is no such thing. This is because Jack would expect to get such a result by chance, given his 17 weeks of experimentation.

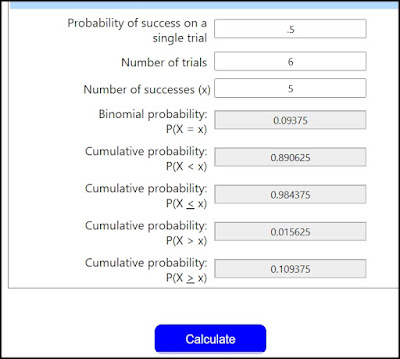

We can use a binomial probability calculator (like the one at the Stat Trek site) to compute the probability of getting by chance 5 (or 6) out of 6 mice avoiding the shock plate, under the assumption that there was always 1 chance in 2 that a mouse would avoid the shock plate. The calculator tells us the chance of this is about 10 percent per experiment (exactly 7 chances in 64, or roughly 10.9 percent).

Since Jack has done this experiment 17 times, and since the chance of getting 5 out of 6 mice avoiding the shock plate by chance is about 10 percent, Jack should expect at least one of these experiments to give the result he eventually got, even if the XYZ protein has nothing at all to do with memory.
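The calculator's figure, and the chance that 17 weeks of trying would turn up at least one such result, can be checked with a few lines of Python. This is a minimal sketch assuming, as above, that each mouse independently has a 1-in-2 chance of avoiding the shock plate and that the 17 weekly experiments are independent of one another; the function name `prob_at_least` is purely illustrative.

```python
from math import comb

def prob_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Binomial tail: chance of k or more 'successes' in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# Chance that 5 or 6 of 6 mice avoid the plate when each has a 1-in-2 chance
per_experiment = prob_at_least(5, 6)  # 7/64

# Chance that at least one of 17 independent experiments shows this by luck
at_least_once = 1 - (1 - per_experiment) ** 17

print(f"per experiment: {per_experiment:.3f}")       # 0.109
print(f"at least once in 17: {at_least_once:.2f}")   # 0.86
```

Under these assumptions a lucky week is not merely possible but likely: roughly 86 percent of the time, 17 weeks of null experiments would produce at least one result like Week 17.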

But there is nothing in the research customs of neuroscience to prevent Jack from doing something that will give readers a wrong impression. Instead of writing a paper covering all 17 weeks of his experimentation, Jack can produce a paper that writes up only Week 17 of his research. The paper can then have a title such as "Memory is weakened when the XYZ protein is removed." We can imagine research standards that would prevent so misleading a paper, but such standards are not in place. Discussing only Week 17 of his research, Jack can claim to have reached a "statistically significant" result providing evidence that the XYZ protein plays a role in memory.

Two other customs greatly aid the accumulation of junk science:

(1) It is not customary in scientific papers to report the exact dates when data was collected. This makes it much harder to track down cases in which an experimenter reports an experimental success during one data-gathering session, and fails to report failures in such experiments during other data-gathering sessions.

(2) It is not customary in scientific papers to report the person who made a particular measurement or produced a particular statistical analysis or a particular graph. So we have no idea of how many hard-to-do-right scientific measurements (using very fancy equipment) were done by scientists-in-training (typically unnamed as paper authors) or by novice scientists who may have committed errors. And we have no idea of how many hard-to-do-right data analysis graphs were done by scientists-in-training or by novice scientists who may have committed errors.

Instead of such customs, there is a custom of writing experimental papers in a vague passive voice. So instead of a paper saying something like, "William Smith measured the XYZ protein levels in the five mice on January 3, 2020," our neuroscience papers are filled with statements such as "XYZ protein levels were measured in five mice," which fail to mention who did the measurement or when it was done.

What kind of research customs would help prevent us from being misled by experimental papers so often? We can imagine some customs:

(1) There might be a custom for every research scientist to keep an online log of his research activities. Such a log would report not only what the scientist found on each day, but also what the scientist was looking for on any particular day. So whenever a scientist reported some experimental effect observed only in Week 27 of a particular year, we could look at his log and see whether he had unsuccessfully looked for such an effect in experiments during the five preceding weeks. Daily log reports would be made through online software that did not allow a report to be edited after the day it was submitted.

(2) There might be a custom that whenever a scientist reported some effect in a paper, he would be expected to fully report on each and every relevant experiment he had previously done that failed to find such an effect. So, for example, it would be a customary obligation for a scientist to make reports such as this whenever there were previous failures: "I may note that while this paper reports a statistically significant effect observed in data collected between June 1 and June 7, 2020, the same experimenter tried similar experiments on five previous weeks and did not find statistically significant effects during those weeks."

(3) It would be the custom to always report in a scientific paper the exact date when data was collected, so that the claims in scientific papers could be cross-checked with the online activity logs of research scientists.

(4) It would be the custom to always report in a scientific paper the exact person who made any measurement, and always report the exact person who made any statistical analysis or produced any graph, so that people could find cases when hard-to-do-right measurement and hard-to-get-right analysis was done by scientists-in-training and novice scientists.

(5) It would be a custom for studies to pre-register a hypothesis, a research plan and a data analysis plan, before any data was collected, which would help prevent scientists from being free to "slice and dice" data 100 different ways, looking for some "statistically significant" effect in twenty different places, a type of method that has a high chance of producing false alarms.

(6) It would be a custom for any scientific paper to quote the pre-registration statement that had been published online before any data was collected, so that people could compare such a statement with how the paper collected and analyzed data, and whether the effect reported matched the hypothesis that was supposed to be tested.

(7) Whenever any type of complex or subtle measurement was done, it would be a custom for a paper to tell us exactly what equipment was used, and exactly where the measurement was made (such as the electron microscope in Room 237 of the Jenkins Building of the Carter Science Center). This would make it possible to identify measurements made with old, "bleeding edge," poorly performing or unreliable equipment.

(8) Government funding for experimental neuroscience research would be solely or almost entirely given to pre-registered "guaranteed publication" studies, that would be guaranteed journal publication regardless of whether they produced null results, which would reduce the current "publication bias" effect by which null results are typically excluded from publication.

(9) Government funding would be denied to experimental neuroscience research that failed to meet standards for minimum study group sizes, greatly reducing all the "way-too-small-sample-size" studies. Journals would either deny publication to such "way-too-small-sample-size" studies or prominently flag them when they used such way-too-small study groups.
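Item (5) above warns that "slicing and dicing" data many ways has a high chance of producing false alarms. A rough sense of how high can be had from a short calculation. This sketch assumes every analysis tests an effect that does not exist, each uses the conventional threshold α = .05, and the analyses are independent; that last assumption is a simplification, since slices of the same data set are usually correlated, so the real figures will differ. The name `familywise_false_alarm` is hypothetical.

```python
ALPHA = 0.05  # conventional significance threshold

def familywise_false_alarm(num_tests: int, alpha: float = ALPHA) -> float:
    """Chance of at least one 'significant' result when no effect exists,
    assuming the tests are independent."""
    return 1 - (1 - alpha) ** num_tests

for k in (1, 5, 10, 20, 100):
    print(f"{k:>3} tests -> {familywise_false_alarm(k):.0%} chance of at least one false alarm")
# 20 tests -> about 64%
```

Pre-registration helps because it fixes the number of planned tests in advance, so the threshold can be adjusted for them (the Bonferroni correction, for instance, divides α by the number of tests) rather than searched past.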

No such customs exist. Instead we have poor neuroscience research customs that guarantee an abundant yearly supply of shoddy papers.

Postscript: My discussion above is largely a discussion of what is called a file-drawer effect, in which wrong ideas arise from a publication bias: scientists write up only experiments that seem to show signs of an effect, leaving in their file drawers the experiments that did not find such an effect. A paper discusses how this file-drawer effect can lead to false beliefs among scientists:

"Many of these concerns stem from a troublesome publication bias in which papers that reject the null hypothesis are accepted for publication at a much higher rate than those that do not. Demonstrating this effect, Sterling analyzed 362 papers published in major psychology journals between 1955 and 1956, noting that 97.3% of papers that used NHST rejected the null hypothesis. The high publication rates for papers that reject the null hypothesis contributes to a file drawer effect in which papers that fail to reject the null go unpublished because they are not written up, written up but not submitted, or submitted and rejected. Publication bias and the file drawer effect combine to propagate the dissemination and maintenance of false knowledge: through the file drawer effect, correct findings of no effect are unpublished and hidden from view; and through publication bias, a single incorrect chance finding (a 1:20 chance at α = .05, if the null hypothesis is true) can be published and become part of a discipline's wrong knowledge."

We can see how this may come into play with neuroscience research. For example, 19 out of 20 experiments may show no evidence of any increased brain activity during some act such as recall, thinking or recognition. Because of the file drawer effect and publication bias, we may learn only of the one study out of twenty that seemed to show such an effect, because of some weak correlation we would expect to get by chance in one out of twenty studies.
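The scenario above can be simulated in a few lines. This is a minimal sketch under stated assumptions: the effect being studied does not exist, each study ends in a single test at α = .05, and (a standard result for continuous test statistics) a p-value under a true null hypothesis is uniformly distributed between 0 and 1, so the sketch draws p-values directly instead of simulating brain scans. The function name `simulate_file_drawer`, the seed and the figure of 20,000 studies are all hypothetical.

```python
import random

def simulate_file_drawer(num_studies: int, alpha: float = 0.05, seed: int = 1):
    """Simulate studies of an effect that does not exist.

    Under a true null hypothesis, each study's p-value is uniform on [0, 1).
    Only studies with p < alpha leave the file drawer and get published.
    """
    rng = random.Random(seed)
    p_values = [rng.random() for _ in range(num_studies)]
    published = [p for p in p_values if p < alpha]
    return p_values, published

all_p, published = simulate_file_drawer(20_000)
share_published = len(published) / len(all_p)
share_positive = sum(p < 0.05 for p in published) / max(len(published), 1)

print(f"studies run: {len(all_p)}")
print(f"studies published: {len(published)} ({share_published:.1%})")
print(f"published studies reporting an effect: {share_positive:.0%}")
```

Roughly 1 study in 20 clears the threshold, yet every study that leaves the file drawer reports an effect that is not there.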

A web site describing the reproducibility crisis in science mentions a person who was told of a neuroscience lab "where the standard operating mode was to run a permutation analysis by iteratively excluding data points to find the most significant result," and quotes that person saying that there was little difference between such an approach and just making up data out of thin air.
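Why iterative exclusion is so close to making data up can be illustrated with pure noise. In this sketch both groups are drawn from the same distribution, so any "effect" is chance. It swaps in a normal approximation to Welch's two-sample test in place of the permutation analysis mentioned in the quote, purely for brevity; the names `two_sample_p` and `greedy_exclusion`, the group size of 10 and the limit of 4 exclusions are hypothetical choices, not anything taken from the lab described.

```python
import math
import random
import statistics

def two_sample_p(a, b):
    """Two-sided p-value for a difference in means, using a normal
    approximation to Welch's statistic (a simplification, not an exact t-test)."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    z = abs(statistics.mean(a) - statistics.mean(b)) / se
    return math.erfc(z / math.sqrt(2))

def greedy_exclusion(a, b, max_drops=4):
    """Repeatedly drop the single data point whose removal most lowers p."""
    a, b = list(a), list(b)  # work on copies; the original data stay intact
    p = two_sample_p(a, b)
    for _ in range(max_drops):
        candidates = []
        for group in (a, b):
            for i in range(len(group)):
                removed = group.pop(i)  # try the data without this point
                candidates.append((two_sample_p(a, b), group, i))
                group.insert(i, removed)  # put it back
        best_p, group, i = min(candidates, key=lambda c: c[0])
        if best_p >= p:
            break  # no single exclusion lowers p any further
        group.pop(i)
        p = best_p
    return p, a, b

rng = random.Random(7)
group_a = [rng.gauss(0, 1) for _ in range(10)]  # same distribution:
group_b = [rng.gauss(0, 1) for _ in range(10)]  # there is no real effect

before = two_sample_p(group_a, group_b)
after, kept_a, kept_b = greedy_exclusion(group_a, group_b)
print(f"p before exclusions: {before:.3f}")
print(f"p after dropping {20 - len(kept_a) - len(kept_b)} points: {after:.3f}")
```

Each exclusion is chosen only because it lowers the p-value, so the reported figure can only fall, and it reflects the search through the data rather than anything about the mice.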