Nowadays university press releases are notorious for their brazen bunk. Authorship anonymity is a large factor that facilitates misleading university and college press releases. Such press releases typically appear without any person listed as the author. So when a lie occurs (as it very often does), you can never point the finger and identify one particular person who was lying. When PR men at universities think to themselves "no one will blame me specifically if the press release has an error," they will feel freer to say misleading and untrue things that make unimpressive research sound important.

Misleading press releases produce an indirect financial benefit for the colleges and universities that release them. Untrue announcements of important research results make the college or university sound like a place where important research is being done. The more such press releases appear, the more people will think that the college or university is worth the very high tuition fees it charges.

Judging from the quote below, it seems that science journalists often look down on the writers of university and college press releases, even though such science journalists very often uncritically parrot the claims of such people. In an Undark.org article we read this:

"Still, there are young science journalists who say they would rather be poor than write a press release. Kristin Hugo, for example, a 26-year-old graduate of Boston University’s science journalism program, refuses to step into a communications role with an institution, nonprofit or government agency. 'I’ve been lucky enough that I haven’t had to compromise my integrity. I really believe in being non-biased and non-partisan,' she says. 'I really, really, really want to continue that. I wouldn’t necessarily begrudge someone for going into [public relations] because there’s money in that, but I’d really like to stay out of it.' "

Let us look at the latest bad joke in the long-running comedy series entitled Neuroscience Press Releases. It is a press release with the untrue title "Neural Prosthetic Device Can Help Humans Restore Memory." We hear this untrue claim: "A team of scientists from Wake Forest University School of Medicine and the University of Southern California (USC) have demonstrated the first successful use of a neural prosthetic device to recall specific memories." Near the front of the press release is a reference to a scientific paper. The paper is a bottom-of-the-barrel kind of affair that fails to document any results greater than you should expect from mere chance, given several groups of researchers trying methods like the method tried, even if the methods all had zero effectiveness.

The paper (which can be read in full here) is entitled "Developing a hippocampal neural prosthetic to facilitate human memory encoding and recall of stimulus features and categories." In the "Main Results" section the paper states this about its tests with 14 subjects:

"Across all subjects, the stimulated trials exhibited significant changes in performance in 22.4% of patient and category combinations. Changes in performance were a combination of both increased memory performance and decreased memory performance, with increases in performance occurring at almost 2 to 1 relative to decreases in performance."

This does not sound like an impressive result at all. If I were to tell 14 people to guess whether it will be sunny or cloudy in particular states on the first of next month, and then I asked them to do the same task for the 15th of the month, while they were holding a rabbit's foot, I might easily find that the number of people who did better with the rabbit's foot was twice as high as the number of people who did worse. I might expect to get such a result with maybe a 25% probability.

There is a way to roughly estimate the probability of getting results like those reported in the paragraph above. We have a description of 14 people being tested, and almost twice as many getting an improvement compared to those getting a decline in performance. The probability of this happening by chance is roughly equivalent to the chance of 14 people each flipping a coin, with nine or more of them getting heads and five or fewer getting tails; for in that case the number of "good" results is about twice the number of "bad" results (if you consider "heads" as the good result). The Stat Trek binomial probability calculator screen below shows the odds of getting 9 or more "heads" results when flipping 14 coins. It is 21%. Because the quote above says "almost 2 to 1" rather than "more than 2 to 1," and 9 is more than twice as high as 4, we can slightly round up this probability of 21% to be a probability of about 25%.
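The coin-flip estimate can be checked without an online calculator. The sketch below is only an illustration of that estimate: it assumes 14 independent fair coin flips, and the function name `binomial_tail` is my own, not something from the paper or from Stat Trek.

```python
from math import comb

def binomial_tail(n: int, k: int, p: float = 0.5) -> float:
    """Probability of k or more successes in n independent trials,
    each with success probability p."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

# Chance of 9 or more heads when 14 fair coins are flipped.
prob = binomial_tail(14, 9)
print(f"P(9 or more heads in 14 flips) = {prob:.3f}")  # prints 0.212
```

The exact value is 3473/16384, or about 21%, which agrees with the calculator figure.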

So some neuroscientist reporting a result like the one mentioned above does nothing to show that anything more than chance was involved. What we should always remember is that neuroscientists are free to do multiple tests, and file away in their file drawers tests that fail completely, producing only results expected by mere chance. Also, there are many thousands of experimental neuroscientists who spend half of their time trying to produce impressive results; and very many journals have "publication bias" under which they only publish positive results. So we never should regard some result that could very easily have been produced by mere chance as a result telling us something about the brain or about the effectiveness of some medical intervention, particularly if such a result is not a well-replicated result. Getting something with a chance likelihood of about 25% is an utterly unimpressive result that does basically nothing to indicate that something more than chance was involved.
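The file-drawer point can be put in numbers. The sketch below assumes, purely for illustration, that each attempt is independent and has a 25% chance of producing a "positive" result by luck alone. The attempt counts are hypothetical, not figures from any study, and the function name is my own.

```python
def chance_of_at_least_one_hit(p_single: float, attempts: int) -> float:
    """Chance that at least one of several independent attempts
    yields a 'positive' result purely by luck."""
    return 1 - (1 - p_single) ** attempts

# Hypothetical attempt counts; 0.25 is the rough chance figure used above.
for attempts in (1, 3, 5, 10):
    chance = chance_of_at_least_one_hit(0.25, attempts)
    print(f"{attempts:2d} attempts: {chance:.3f}")
```

Under these assumptions, ten attempts give about a 94% chance that at least one of them looks like a success, even if the method being tested does nothing at all.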

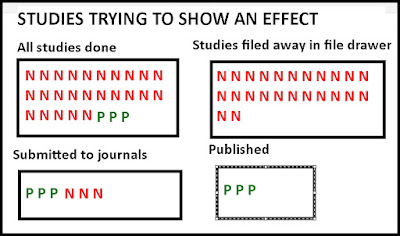

The diagram below illustrates why getting a result with a chance likelihood of about 25% does basically nothing to show that some medical device is effective. The red N's are negative results, and the green P's are positive results. In the case shown below, reports of a positive effect get published, even though 9 out of 10 experiments failed to show the effect.

Figure 4 of the "Developing a hippocampal neural prosthetic..." paper gives us a "heat map" of the results, shown below. The gray squares represent cases of "no significant change." The yellow, orange, brown and purple squares represent a negative change in memory performance. The blue squares represent a positive change in memory performance. No one making a brief study of the chart below will notice any difference. The amount of negative change seems about the same as the amount of positive change.

The authors then confess the miserably low statistical power of their results. The rule of thumb in experimental studies is that a statistical power of at least 80% is needed to be considered "good." But the authors confess their results have a statistical power of only 10%.

Such results have no persuasive power. They are results that are not good evidence for anything. The authors give this pitiful-sounding bit of excuse making for their poor results:

"With respect to the low overall statistical power, achieving high power would require an extremely large number of trials, which would take over an order of magnitude more time with a patient than what is possible in a clinical setting due to the time allotted for experiments. Within the clinical restrictions, and without causing undue fatigue on the patients, this experiment has run the maximum number of trials that was able to be performed with each patient. This is a restriction that is inherent with working with not only a patient population, but also the low number of overall trials compliant with a memory task (e.g., Suthana et al., 2012; Ezzyat et al., 2018) required to perform these types of experiments. Further, due to the relatively small nature of the patient population undergoing procedures which require the implantation of neural electrodes, this study, with an n of 14, has a relatively large patient population compared to many published neurostimulation papers. The low statistical power and limited patient group is therefore not unique to our lab or experiment, but also applies to other research that has been published in this area."

The excuses are not valid. If the device they created had worked, you would not have needed to run ten times as many trials on the 14 subjects used. In such a case good statistical power would have been demonstrated with the same number of trials. As for the last sentence, it is equivalent to saying, "Yes, our results are pitiful, but all the other experiments like ours produce results equally pitiful."
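The link between effect size and statistical power can be sketched with a simple one-sided binomial test. Everything in the sketch is an assumption chosen for illustration: 50 trials, a 50% success rate under the "no effect" hypothesis, and a 5% significance level. None of these numbers come from the paper, and the paper's own power analysis may have used a different method.

```python
from math import comb

def upper_tail(n: int, k: int, p: float) -> float:
    """Probability of k or more successes in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

def power_one_sided(n: int, p_null: float, p_true: float,
                    alpha: float = 0.05) -> float:
    """Power of a one-sided exact binomial test of p_null against p_true."""
    # Smallest success count whose chance under "no effect" is at most alpha.
    critical = next(k for k in range(n + 2) if upper_tail(n, k, p_null) <= alpha)
    # Power is the chance of reaching that count when the true rate is p_true.
    return upper_tail(n, critical, p_true)

# Hypothetical true success rates, from a tiny effect to a large one.
for p_true in (0.55, 0.70, 0.90):
    print(f"true rate {p_true:.2f}: power {power_one_sided(50, 0.5, p_true):.2f}")
```

With the number of trials held fixed, power climbs steeply as the true effect grows. Under these assumptions a large effect is detected almost every time, while a tiny effect falls far short of the 80% rule of thumb.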

Now, there's nothing wrong with producing an unsuccessful study that fails to produce any decent evidence of memory improvement by electronic means. The only sin is if you boast about such a result, and produce an untrue press release shamelessly making the false claim that you have produced a device that can "help humans restore memory." And that is exactly what Wake Forest University School of Medicine did. They have described a very unconvincing experimental result as evidence that someone created a device that can "help humans restore memory." It's just another example of what goes on every day in the world of science news: colleges, universities and other institutions making false boasts about achieving scientific goals they did not actually achieve. The epidemic of misleading statements in university press releases is an epidemic that continues to rage full blast, and you will find some of its worst examples in press releases about biology and medicine.

If I were to do a visual giving a proper metaphor for the type of boasting that went on in the press release described above, the kitten would have to be so small you couldn't even recognize it as a kitten.

Postscript: The tendency of university press releases to make groundless boasts about biology and medicine accomplishments is also shown in a recent press release entitled "Research team uncovers universal code driving the formation of all cell membranes." We have a press release with what sounds like some runaway groundless boasting:

"The theory describes how membranes are compartmentalized, remodeled and regulated, and provides a basis for understanding fundamental questions such as how life is formed at conception, how viruses invade cells and how neurons send signals for feeling, thought and action....'We feel that this represents a conceptual revolution that is akin to the discovery of the genetic code,' says Overduin. 'We're the first to see the overall forest from the trees.... It's remarkable that an undergraduate student can come up with a new code that turns biology upside down and allows us to make sense of it in a radically new way.' "

This all seems very much like groundless boasting, and a look at the scientific paper provides no evidence that anything new has been discovered. Finding a new code used by cells comparable to the genetic code would involve discovering some new repository of information that used repeated symbolic tokens in a systematic way, involving some scheme of representation comparable to the Morse Code or the genetic code. The authors present no evidence they have discovered any such thing. All we have in the paper is some very woolly speculation not well-supported by any observations. The press release statements sound like runaway delusions of grandeur not justified by any observations.

But that's how university press releases are these days. The rule is: "anything goes" when it comes to boasting.
