In 2003 the United States launched an unprovoked invasion of Iraq. The US tried to get the United Nations Security Council to approve its invasion, but failed. Although Iraq was ruled by a very bad man (Saddam Hussein), there was no justification in international law for the invasion. Far from threatening the United States at the time the invasion was launched, Iraq was allowing weapons inspectors a free run of the country in the months before the invasion. The US claimed that the invasion was justified because Iraq had weapons of mass destruction. No such weapons showed up in searches of the country following its invasion and occupation by the United States.

To help get compliant journalists who would write favorable stories, the US military used techniques that encouraged what were known as embedded journalists. An embedded journalist was one who would live for a long time with particular military units that entered Iraq, following them wherever they went. This would encourage a kind of bonding and brotherhood that would be most likely to result in favorable stories with a title such as "The Heroes That I Rode With Into Baghdad" rather than stories questioning the United States' unprovoked invasion of Iraq. Have a man wear the uniform of the 1st Infantry Division and have him drive around with other soldiers in an armored personnel carrier of the 1st Infantry Division, and you will be likely to get someone reporting favorably on the activities of the 1st Infantry Division, particularly if such a division does its nastiest work when embedded journalists are not around.

In the world of science journalism, we sometimes see the "embedded journalist" technique being used. To help get favorable news accounts of extremely dubious and poorly designed brain scan experiments, some neuroscientists seem to be encouraging or allowing science journalists to participate in such experiments, giving them experiences just like the subjects of such experiments. So we have articles such as the recent Scientific American article "Brain Waves Synchronize When People Interact." The piece is an example of a science journalist writing a long article that fails to critically examine extremely weak and poorly supported claims. Giving us no references to back up such claims, the author states this:

"An early, consistent finding is that when people converse or share an experience, their brain waves synchronize. Neurons in corresponding locations of the different brains fire at the same time, creating matching patterns, like dancers moving together."

There is no robust evidence for such claims. Neurons fire almost constantly in all parts of the brain. So anyone looking for neurons firing at the same time in the corresponding parts of two different brains will always be able to find both parts firing at the same time. There is no good evidence for any synchronization resembling dancers moving together to perform the same choreography.
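
The point about constant firing can be illustrated with a toy simulation. This is only a rough sketch under assumptions of my own: two completely independent "regions" that each fire at random in 30 percent of time bins. The function name and all the numbers are hypothetical, and nothing here models real neurons. Even with no connection at all between the two series, simultaneous firing shows up in roughly 0.3 × 0.3 = 9 percent of the time bins.

```python
import random

def coincidence_fraction(n_bins, p_fire, seed):
    """Fraction of time bins in which two INDEPENDENT regions both fire.

    Hypothetical toy model: each region fires in a bin with probability
    p_fire, with no link of any kind between the two regions.
    """
    rng = random.Random(seed)
    region_a = [rng.random() < p_fire for _ in range(n_bins)]
    region_b = [rng.random() < p_fire for _ in range(n_bins)]
    both = sum(1 for a, b in zip(region_a, region_b) if a and b)
    return both / n_bins

# Assumed values, chosen only for illustration.
frac = coincidence_fraction(n_bins=10_000, p_fire=0.3, seed=1)
print(f"Bins where both unrelated regions fire together: {frac:.3f}")
```

Hundreds of simultaneous firings can be found in such data, and none of them means anything.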

The science journalist writing the story has acted as an "embedded journalist." She has undergone a medically unnecessary hour-long brain scan as part of her story about other people undergoing medically unnecessary brain scans as part of a science experiment. She tells us that a neuroscientist "had enlisted" her in this "pioneering study." They have actually been doing similar studies for a long time. We hear a neuroscientist named Wheatley make this untrue, nonsensical claim:

"When we're talking to each other, we kind of create a single uberbrain that isn't reducible to the sum of its parts. Like oxygen and hydrogen combine to make water, it creates something special that isn't reducible to oxygen and hydrogen independently."

No, when I talk to some stranger, that does not create some single brain, and it isn't anything whatsoever like oxygen and hydrogen combining to make water. Our science journalist lets this very silly statement get by without questioning it. She states the following about the aftermath of her brain scan:

"The researchers will compare the activity in my and Sid's brains, and the brains of all the other pairs in the study, second by second, voxel by voxel, over the course of our storytelling session, looking for signs of coherence..Such studies take time, but in a year or so, if all goes according to plan, they will publish their first results."

Gee, that sounds very much like the scientists will be eagerly seeking correlations, spending a whole lot of time slicing and dicing data, until some little bit of "coherence" can be squeezed out somewhere. It sounds like what would be going on if scientists took 10,000 photos of clouds in New York and Shanghai, and then eagerly studied them, trying to look for some correlation between clouds at the same time over New York and Shanghai.
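
Here is a minimal sketch of why a search like this is almost guaranteed to turn up something. It is a toy simulation under my own assumptions, not a reconstruction of the analysis the researchers will actually run: 500 hypothetical "voxels," each giving two series of pure random noise that have nothing to do with each other, with 30 time points per series. The code computes the correlation for each voxel pair and reports both the typical value and the best one found.

```python
import random
from math import sqrt

def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def scan_for_coherence(n_voxels, n_timepoints, seed):
    """Correlate many pairs of unrelated noise series.

    Returns (median |r|, largest |r|) across all the voxel pairs.
    """
    rng = random.Random(seed)
    abs_rs = []
    for _ in range(n_voxels):
        brain_a = [rng.gauss(0, 1) for _ in range(n_timepoints)]
        brain_b = [rng.gauss(0, 1) for _ in range(n_timepoints)]
        abs_rs.append(abs(pearson_r(brain_a, brain_b)))
    abs_rs.sort()
    return abs_rs[len(abs_rs) // 2], abs_rs[-1]

# Assumed sizes, chosen only for illustration.
typical_r, best_r = scan_for_coherence(n_voxels=500, n_timepoints=30, seed=7)
print(f"Typical |r| between unrelated series: {typical_r:.2f}")
print(f"Best |r| found by searching all voxels: {best_r:.2f}")
```

The typical correlation is small, as it should be for noise. But the best of the 500 is far larger, and a researcher who reports only the best one has "coherence" to announce.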

The process of looking for similar brain wave patterns or brain activity patterns in two different people being scanned is called "hyperscanning" or a search for "hyper-connectivity." A 2013 scientific paper on hyperscanning ("On the interpretation of synchronization in EEG hyperscanning studies: a cautionary note") found this:

"To conclude, existing measures of hyper-connectivity are biased and prone to detect coupling where none exists. In particular, spurious hyper-connections are likely to be found whenever any difference between experimental conditions induces systematic changes in the rhythmicity of the EEG. These spurious hyper-connections are not Type-1 errors and cannot be controlled statistically."

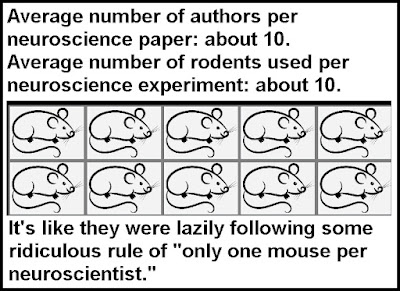

A meta-analysis of hyperscanning studies reveals that more than 80 percent of them consisted of only two subjects. Two subjects is far too small a study group size for a robust result. An informal rule of thumb is that for any correlation-seeking study a minimum of 15 subjects per study group is needed for anything that might be a reliable result (and in most cases the minimum study group size is even larger). Almost every hyperscanning study is very guilty of Questionable Research Practices because of the grossly inadequate study group sizes used.
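
The trouble with tiny study groups can be shown with another toy simulation. It is only a sketch under assumptions of my own: each simulated "study" measures two unrelated random quantities on every subject, and the group sizes and the 0.5 threshold are arbitrary illustrative choices, not figures from any paper. The code counts how often such a study stumbles on a seemingly strong correlation by pure chance.

```python
import random
from math import sqrt

def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def fraction_of_strong_correlations(group_size, n_studies, threshold, rng):
    """Share of simulated studies reporting |r| > threshold.

    The two measured quantities are independent noise, so every
    "strong" correlation counted here is a false alarm.
    """
    hits = 0
    for _ in range(n_studies):
        xs = [rng.gauss(0, 1) for _ in range(group_size)]
        ys = [rng.gauss(0, 1) for _ in range(group_size)]
        if abs(pearson_r(xs, ys)) > threshold:
            hits += 1
    return hits / n_studies

rng = random.Random(11)
results = {
    size: fraction_of_strong_correlations(size, n_studies=2000, threshold=0.5, rng=rng)
    for size in (5, 15, 50)  # hypothetical study group sizes
}
for size, frac in results.items():
    print(f"{size:>2} subjects: {frac:.1%} of studies show |r| > 0.5 by chance")
```

The false alarms are common with 5 subjects, much rarer with 15, and almost vanish with 50. The smaller the group, the easier it is for chance alone to hand you an impressive-looking number.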

If our Scientific American journalist had not been "embedded" as part of a study, she might have been more likely to have critically covered the very dubious research involved. The journalist appeals to the faddish concept of "interbrain synchrony." A 2022 paper is entitled "Interbrain synchrony: on wavy ground." Noting the fad-like nature of the research (by using the terms "in recent years" and "by storm"), the paper states this:

"In recent years the study of dynamic, between-brain coupling mechanisms has taken social neuroscience by storm. In particular, interbrain synchrony (IBS) is a putative neural mechanism said to promote social interactions by enabling the functional integration of multiple brains. In this article, I argue that this research is beset with three pervasive and interrelated problems. First, the field lacks a widely accepted definition of IBS. Second, IBS wants for theories that can guide the design and interpretation of experiments. Third, a potpourri of tasks and empirical methods permits undue flexibility when testing the hypothesis. These factors synergistically undermine IBS [interbrain synchronicity] as a theoretical construct."

The paper is behind a paywall, but I can make a good guess at what the author is referring to by mentioning "a potpourri of tasks and empirical methods permits undue flexibility when testing the hypothesis." I would guess he's talking about the fact that "hyperscanning" studies or studies of "interbrain synchrony" are typically not pre-registered studies committing themselves to one particular way in which a synchrony of brains will be measured and analyzed. When researchers are free to use any of 1001 statistical measures while trying to show some brain synchrony, it is hardly surprising that some success will be reported. When you are free to keep torturing the data until it confesses, you will get a few confessions.
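
What that kind of freedom can do is easy to sketch. The toy example below rests entirely on my own assumptions and is not taken from the paywalled paper: a single pair of unrelated random series stands in for two brains, and the "analysis choices" are a hypothetical menu of time lags, window start points, and window lengths. The code compares the result of one analysis fixed in advance (the whole series, no lag) with the best result found after trying every option on the menu.

```python
import random
from math import sqrt

def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def best_of_many_analyses(seed):
    """Try many analysis choices on ONE pair of unrelated noise series.

    Returns (|r| of the fixed-in-advance analysis,
             largest |r| over all analyses tried,
             number of analyses tried).
    """
    rng = random.Random(seed)
    length = 140
    x = [rng.gauss(0, 1) for _ in range(length)]
    y = [rng.gauss(0, 1) for _ in range(length)]

    # The "pre-registered" analysis: whole series, no lag.
    baseline = abs(pearson_r(x, y))
    best = baseline
    n_analyses = 1

    # Hypothetical menu of choices: lag, window start, window length.
    for lag in range(-5, 6):
        for start in range(10, 101, 10):
            for window in (20, 30):
                xs = x[start:start + window]
                ys = y[start + lag:start + lag + window]
                best = max(best, abs(pearson_r(xs, ys)))
                n_analyses += 1
    return baseline, best, n_analyses

baseline, best, n_analyses = best_of_many_analyses(seed=3)
print(f"Fixed-in-advance analysis: |r| = {baseline:.2f}")
print(f"Best of {n_analyses} analyses:      |r| = {best:.2f}")
```

Pre-registration amounts to committing to the first number before looking at the data. Without it, a researcher is free to report the second.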

Yesterday at the Mad in America site we had a long article by University of Pennsylvania neuroscience professor Peter Sterling that presents an indictment of some poorly established, fad-centered work in neuroscience. Here are a few interesting quotes:

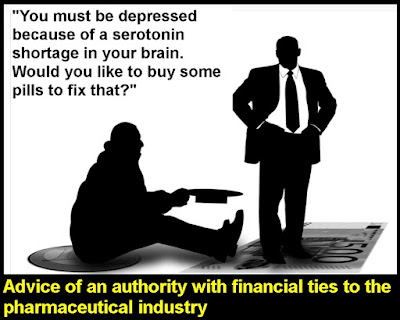

"Here I comment on the 2022 review, 'Causal Mapping of Human Brain Function' by Siddiqi et al., which appeared in Nature Reviews Neuroscience....To be clear: there is no neuroscience to suggest that any mental function would be improved by ablating or stimulating a particular structure in the prefrontal cortex or its associated subcortical regions. To the contrary, what we know of the intrinsic organization of the prefrontal cortex suggests that mucking with it is unlikely to help. Any suggestion to the contrary is simply wild speculation. The Review tries to justify its story by claiming efficacious results. But I have heard these claims before, and they never check out. They’re just a succession of Ponzi schemes, as here recounted.... A new generation of psychosurgeons has been emboldened. Moreover, they are supported by prominent journals that publish the same old single-case reports that claim good results, even when casual inspection indicates they are clearly not good....Why does Frontiers publish this atrocious nonsense? I believe that it represents deep corruption that is creeping back into neuroscience—as will be further noted below.... Here is the lead example cited for efficacy: '16 patients were randomized into a two-week crossover period…During the crossover phase, the mean difference in Y-BOCS score was 8.3 points (P = 0.004); that is, an improvement of 25%'. Yikes! 25% improvement for 2 weeks?? Nature Medicine??....The lead author acknowledges membership on advisory boards and speaker’s honoraria from Medtronic, Boston Scientific, and Abbott, with a long list of similar 'competing interests' for many of the other contributors. 
For Nature Medicine to publish such pitifully weak findings by authors with a financial stake in the industry strikes me as more evidence for a true 'crisis': rising collusion between Big Scientific Publishing and Big Pharma/Medical Electronics...Despite efforts to find 'biomarkers' for mental disturbance, they remain elusive (Insel, 2022). Two recent large-scale neuroimaging studies find no evidence to distinguish depressed from normal individuals or even to distinguish the two populations (reviewed in Sterling, 2022). This suggests that the Review’s claims to identify regions of interest in depression, including subcategories, and 'criminal behavior' and 'free will' are artifactual. Such claims emerge inevitably in small studies: the message from neuroscience needs to go out: distrust small studies....The neuroscience community has been powerfully corrupted by dreams of glory and gelt."