When neuroscientists are interviewed, interviewers never seem to ask the kind of questions that should be asked of people who claim to know grand things but who have no observations or reasoning backing up such boasts. Why is it that people interviewing neuroscientists always seem to pitch only the softest of softball questions? It's rather like the situation imagined below:

An example of such an interview is the recent Quanta Magazine interview of neuroscientist Anil Seth. Seth is promoting a book that apparently claims to offer some explanation of minds. The book has the pretentious title Being You: A New Science of Consciousness. But in the interview Seth does not sound like anyone who has any credible idea of how to explain human minds. What we get is the most vacuous hand-waving, combined with circuitous not-very-relevant digressions. Seth has a chance here to persuade us that he has some substantial idea of how to explain minds. He completely fails to do so, leaving us with the impression of someone empty-handed when it comes to mind explanation.

Early in the article, Seth starts out by making a groundless triumphal boast he does nothing to substantiate. He says this:

“I always get a little annoyed when I read people saying things like, ‘Chalmers proposed the hard problem 25 years ago’ … and then saying, 25 years later, that ‘we’ve learned nothing about this; we’re still completely in the dark, we’ve made no progress,’” said Seth. “All this is nonsense. We’ve made a huge amount of progress.”

No, neuroscientists have not done anything to credibly explain human minds; and we have no examples from Seth of progress that was made.

We then have Seth and his interviewer engaging in the vapid word trick of consciousness shadow-speaking. The trick involves acting as if there is a mere "problem of consciousness" rather than an almost infinitely greater problem of explaining human minds. A human being is not merely "some consciousness." A human being is an enormously complex reality, and the mental reality is as complex as the physical reality. You dehumanize and degrade human beings when you refer to their minds as mere "consciousness." The problem of human mentality is the problem of credibly explaining the thirty or forty most interesting types of human mental experiences, human mental characteristics and human mental capabilities. Instead of just being "some consciousness," human minds have a vast variety of mental capabilities and mental experiences such as these:

- imagination

- self-hood

- abstract idea creation

- appreciation

- memory formation

- moral thinking and moral behavior

- instantaneous memory recall

- instantaneous creation of permanent new memories

- memory persistence for as long as 50 years or more

- emotions

- desire

- speaking in a language

- understanding spoken language

- creativity

- insight

- beliefs

- pleasure

- pain

- reading ability

- writing ability

- mental illness of many different types

- ordinary awareness of surroundings

- visual perception

- recognition

- planning ability

- auditory perception

- attention

- fascination and interest

- the correct recall of large bodies of sequential information (such as when someone playing Hamlet recalls all his lines correctly)

- eyes-closed visualization

- extrasensory perception (ESP)

- dreaming

- pattern recognition

- social abilities

- spirituality

- philosophical reasoning

- mathematical ability

- volition

- trance phenomena

- exceptional memory such as hyperthymesia

- extraordinary calculation abilities such as in autistic savants

- out-of-body experiences

- apparition sightings

It is always a stupid trick when someone tries to reduce so complex a reality to the faintest shadow of what it is, by speaking as if there is a mere "problem of consciousness," and talking as if humans are just "some consciousness" that needs to be explained. Such a trick (which can be called consciousness shadow-speaking) is as silly as ignoring the vast complexity of the organization of the human body, and speaking as if explaining the origin of human bodies is just a task of explaining how there might occur "some carbon concentrations."

After some dehumanizing nonsense in which Seth describes humans as machines or animals, Seth offers this utterly vacuous attempt at mind explanation:

"I eventually get to the point that consciousness is not there in spite of our nature as flesh-and-blood machines, as Descartes might have said; rather, it’s because of this nature. It is because we are flesh-and-blood living machines that our experiences of the world and of 'self' arise."

Nothing of any substance is said here, and we have three clues that the speaker has gone way, way wrong. The first is the dehumanizing nonsense of referring to humans as machines. The second is the denialist nonsense of referring to the human self in quotation marks, as if Seth does not believe such a thing exists. Then there's the ridiculous idea that describing a human as a machine could somehow shed light on why humans are conscious. Machines are not conscious.

Humans are not animals, and the habit of calling humans animals is a senseless and morally harmful speech custom of biologists, who should have classified the human species in a separate human kingdom rather than classifying the human species in an animal kingdom. Humans are not machines, and human bodies are not machines. One of the many differences between a machine and a human body is that machines do not continually replace their constituent components, but human bodies and human brains continually replace their protein parts. I can understand why Seth would be inclined to forget about human brains constantly replacing their protein parts, because it is one of many facts that discredit the claims of people such as Seth that the brain is the storage place of memories. The average lifetime of brain proteins is roughly 1000 times shorter than the maximum length of time that humans can remember things (more than 50 years). You wouldn't remember anything for more than a few weeks if your memories were stored in your brain.

Next in the Quanta Magazine interview we have a mention of integrated information theory (IIT), and Seth says that he finds "bits of IIT promising, but not others." He says, "The parts of IIT that I find less promising are where it claims that integrated information actually is consciousness." Seth wastes several paragraphs of his interview talking about a theory that he does not endorse, so that part of the interview does nothing to help Seth give the reader the impression that he has any explanation for human minds.

We then have Seth talking for three paragraphs about a "free energy principle." Filled with circumlocutions, the discussion sounds like irrelevant hand-waving, with no apparent bearing on an explanation of minds. Seth then proceeds to make a false-as-false-can-be statement that makes him sound like a poor scholar of biology. He says, "Historically, there is a commonality between the apparent mysteries of 'life' and of 'heat,' which is that both eventually went away — but they went away in different ways."

Nothing could be more false than the claim that the mysteries of life "went away." The more scientists have learned about the vast levels of organization and the stratospheric heights of functional complexity and information-rich interdependence in living things, the greater the mysteries of life have grown. Scientists have no credible explanation for the origin of life. Scientists have no credible explanation for the origin of the protein molecules or the cells in human bodies. Scientists cannot even explain how a human cell is able to reproduce. The progression from a speck-sized zygote to the state of vast hierarchical organization that is the human body is a miracle of organization a thousand miles over the heads of scientists. Claims that such a progression occurs by a reading of a body blueprint in DNA are false -- DNA does not have any specification of how to build a human body or any of its organs or any of its cells.

When we come to human minds, the mysteries of life are endless. Neuroscientists lack any credible explanation for learning, the lifelong preservation of memories, the ability of humans to instantly recall, and the ability of humans to think, imagine and believe. When neuroscientists try to give explanations for such things, they give us sound bites that are the vaguest hand-waving, sound bites such as "synapse strengthening" which cannot be the correct explanation, given how humans can form memories instantly (much faster than synapses can strengthen), and given how unstable synapses are (consisting of proteins with an average lifetime of only a few weeks or less).

Seth then proceeds to make this false-as-false-can-be claim: "The problem of life wasn’t solved; it was 'dissolved.' " No such thing occurred. There are still innumerable gigantic unsolved and unanswered problems of biology. The more we have learned about the facts of biology and the sky-high levels of organization, fine-tuning and information-rich functional complexity everywhere in human bodies, the bigger the explanatory problems have grown.

Seth makes a very misguided attempt to insinuate that the problem of explaining minds may be solved like the problem of heat was solved. In the nineteenth century scientists did not understand heat, and thought that maybe heat was some substance (called caloric) which existed more abundantly the hotter things were. Later this idea was discarded, and scientists started to understand that heat is just a measure of the speed at which molecules in some object are moving. But there is no reason to think that such a thing has any relevance to explaining minds. Heat was always a very simple property, something that can be expressed by a single number (a temperature). But a mind is not a property. A mind is a reality of very great diverse complexity, a wealth of very different and diverse capabilities and vastly varying experiences, something vastly too complex and diverse to ever be expressed by a single number. And heat is a physical thing, but a mind is not a physical thing.

There's another reason why it is senseless for Seth to try to insinuate that the explanation of heat has some relevance to an explanation of minds. Heat was explained by an explanation of molecule motion, the idea that heat is really how fast molecules are moving around in a substance. But the neurons which Seth thinks are an explanation for minds are not things in motion. Neurons are held in place by their branching dendrites and axons in a way that makes each neuron as immobile as a tree.

Then we have this little bit of hand-waving vacuity from Seth:

"Here, it’s going to be a case of saying consciousness is not this one big, scary mystery, for which we need to find a humdinger eureka moment of a solution. Rather, being conscious, much like being alive, has many different properties that will express in different ways among different people, among different species, among different systems. And by accounting for each of these properties in terms of things happening in brains and bodies, the mystery of consciousness may dissolve too."

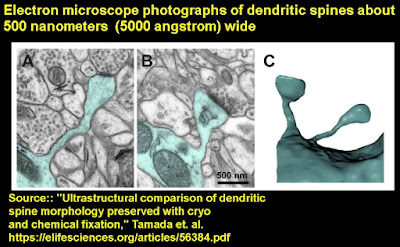

There's no explanation there, just a promissory note that has no credibility, combined with misleading language about properties. Brains have been analyzed to death, and brain tissue has been exhaustively examined with the most powerful microscopes capable of photographing the tiniest brain structures. But no one has found in brains anything that can explain the capabilities of minds. There's no reason to think that further analysis of brains will do anything to explain minds. Also, a human mind is not some mere set of properties, but something enormously more than that. The human mind is largely an enormously impressive set of capabilities and experiences. You can't explain capabilities and experiences by merely explaining properties. A property is some simple aspect of a physical thing that can be expressed with a single number, something like height, mass, width, depth or volume. There is no possible set of property explanations that can add up to be an explanation of a human mind, or even a tenth of an explanation of a human mind.

Earlier in the interview Seth has made this gigantically misleading statement: "Life is a constellation concept — a cluster of related properties that come together in different ways in different organisms." No, physically speaking, a human body is something enormously more than some mere "cluster of related properties." A human body is a state of enormously organized matter, purposefully arranged in an accidentally unachievable manner to perform enormously complex and hard-to-achieve functions such as respiration, blood circulation, locomotion, visual perception and digestion. Depicting a human body as a "cluster of related properties" is as misleading as depicting an aircraft carrier as a "cluster of related properties."

The interviewer asks about Seth's goofy claim that perception is a "controlled hallucination" and Seth wanders in circles for four paragraphs without ever justifying this bizarre phrase. The interview then ends with Seth addressing questions about whether machines will ever be conscious, but not in any way that persuades us that he has any explanation for human minds.

The interview leaves us with the impression that neuroscientist Anil Seth is quite empty-handed in regard to explaining minds. Seth went round and round in circles, engaging in the most vacuous circumlocutions. Nothing that he has said gives us any good reason for suspecting that he has any real understanding of how minds arise or how humans have the mental capabilities that they have. Seth's interview reminds me of what a dead end the brain explanation for human minds is.

You won't get anything any better if you read the prologue of Seth's new book, along with about 25 pages of the book, available on Google Books. In those pages, Seth sounds every bit as empty-handed as a mind explainer as he does in the Quanta Magazine interview, and seems to tell us nothing of any value in explaining human minds or human mental phenomena. He nonsensically promises on pages 7 to 8 that by the end of the book you'll understand your consciousness is a "controlled hallucination." That's a very silly thing to say, given that hallucinations are sensory experiences of things that are not really there, and that our normal conscious experience is of seeing things that are there.

It seems there is also basically "no there there" in Seth's 25-page paper "Being a beast machine: the somatic basis of selfhood." It seems like a vacuous empty-handed running-around-in-circles mess of doubletalk, gobbledygook and jargon. In the abstract Seth says "we describe embodied selfhood in terms of ‘instrumental interoceptive inference,' " an utterance that makes no sense given that 99% of the time you are a self, you are not making any inferences. Inference is a relatively rare activity of a human mind. Things are not at all clarified when Seth later tells us that "instrumental interoceptive (active) inference involves descending interoceptive predictions being transcribed into physiological homeostasis by engaging autonomic reflex arcs." The lack of a "somatic basis of selfhood" is shown by out-of-body experiences (experienced by a significant fraction of the population) in which human selves vividly observe their bodies from outside of their bodies, such as two meters away, something that would never occur if your body was the basis of your self.

The paper's claim that "the brain embodies and deploys a generative model which encodes prior beliefs" is without any foundation in neuroscience observations. No one has ever found any evidence of models or beliefs stored in a brain, and no one has any detailed credible theory of how a belief could ever be stored or encoded in a brain or maintained for decades in a brain that undergoes such very high molecular turnover and synaptic turnover. The observational neuroscience here is so nonexistent that while scientists have a word ("engram") for the unwarranted speculative idea of memories stored in brains, neuroscientists do not even have a word meaning a place where a brain stores a belief.

Part of what's going on is that Seth seems to be using the illegitimate trick of describing a self that has sensory experiences, and then trying to suggest that such sensory experiences explain a self. The fallacy is that self-hood occurs just fine without such sensory experiences. People my age often lie awake in bed at night for an hour or more, in silent darkness, with only negligible sensory experiences. During such an hour my self-hood is still going full blast, as I think, remember, plan and engage in self-evaluation, insight and introspection. Sensory experiences are things that occur to selves, but not any explanation for self-hood itself. Self-hood is something we should never expect to occur from brains. There is no reason why chemical or electrical activity from a set of billions of neurons connected by unreliably transmitting synapses would ever give rise to a unified sense of self, just as there is no reason why 10 million people named Smith scattered around the world would ever have a unified identity as a single Smith presence in the world.

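Imagine scientists living on a planet called Evercloudy, a planet so perpetually covered in clouds that its inhabitants have never seen their sun. Such scientists would face two questions:

(1) What causes daylight on planet Evercloudy?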

(2) How is it that planet Evercloudy stays warm enough for life to exist?

Having no knowledge of their sun, the correct top-down explanation for these phenomena, the scientists on planet Evercloudy would probably come up with very wrong answers. They would probably speculate that daylight and planetary warmth are bottom-up effects. They might spin all kinds of speculations such as hypothesizing that daylight comes from photon emissions of rocks and dirt, and that their planet was warm because of heat bubbling up from the hot center of their planet. By issuing such unjustified speculations, such scientists would be like the scientists on our planet who wrongly think that life and mind can be explained as bottom-up effects bubbling up from molecules.

Facts on planet Evercloudy would present very strong reasons for rejecting such attempts to explain daylight and warm temperatures on planet Evercloudy as bottom-up effects. For one thing, there would be the fact of nightfall, which could not easily be reconciled with any such explanations. Then there would be the fact that the dirt and rocks beneath the feet of the scientists of Evercloudy would be cold, not warm as would be true if such a bottom-up theory of daylight and planetary warmth were correct. But we can easily believe that the scientists on planet Evercloudy would just ignore such facts, just as scientists on our planet ignore a huge number of facts arguing against their claims of a bottom-up explanation for life and mind (facts such as the fact that people still think well when you remove half of their brains in hemispherectomy operations, the fact that the proteins in synapses have very short lifetimes, and the fact that the human body contains no blueprint or recipe for making a human, DNA being no such thing).

We can imagine someone trying to tell the truth to the scientists on planet Evercloudy:

Contrarian: 'You have got it very wrong. The daylight on our planet and the warmth on our planet are not at all bottom-up effects bubbling up from under our feet. Daylight and warmth on our planet can only be top-down effects, coming from some mysterious unseen reality beyond the matter of our planet.'

Evercloudy scientist: 'Nonsense! A good scientist never postulates things beyond the clouds. Such metaphysical ideas are the realm of religion, not science. We can never observe what is beyond the clouds.'

Just as the phenomena of daylight and planetary warmth on planet Evercloudy could never credibly be explained as bottom-up effects, but could only be credibly explained as top-down effects coming from some mysterious reality unknown to the scientists of Evercloudy, the phenomena of life and mind on planet Earth can never be credibly explained as bottom-up effects coming from mere molecules, but may be credibly explained as top-down effects coming from some mysterious unknown reality that is the ultimate source of life and mind.